JavaScript is a single-threaded, synchronous language, yet dealing with asynchronous behavior is a huge part of development. How can we as web developers create a truly interactive and dynamic user experience if we don’t consider:

- How we’re handling functionality where values in one function depend on values produced by a prior function

- The problems each asynchronous pattern presents

- Whether there’s a better tool for the task at hand

How does JavaScript deal with asynchronous concurrent behavior (multiple tasks happening within the same time frame)? There are a handful of patterns that exist to handle concurrency within the language, but which is the best tool for the job? Thunks exist as a means to manage concurrency. How do we use them and what do they bring to the table?

WHAT ARE THUNKS?

Thunks are a pattern used to handle asynchronous behavior, represented as a variation of a callback function. To understand why thunks are useful as a pattern, I'm going to first take you down a small rabbit hole covering one of the asynchronous programming problems callback functions are trying to solve: the ability to reason about asynchronous logic.

HOW DOES JAVASCRIPT HANDLE ASYNCHRONOUS BEHAVIOR?

The event loop is the model JavaScript employs to prioritize how tasks are scheduled back onto the thread of execution. It is composed of a call stack, a microtask queue, and a callback queue. While this can be a complex thing to explain, here is a real-world analogy of how these pieces work together in the event loop.

Let's use an airport boarding process. There are three tiers of tickets, each with a corresponding line: the first, second, and third tiers are reserved for call stack, microtask, and callback ticket holders, respectively. There is a single pathway onto the plane, through the line reserved for call stack ticket holders. The flight attendant announces the plane is ready to board, and everyone with a call stack ticket has priority to board. Once all call stack ticket holders have entered the plane, everyone holding a microtask ticket is asked to step into the call stack line to enter the plane. Once they have boarded, those holding a callback ticket are asked to do the same. The flight attendant looks a little nervous as the callback ticket holders are entering the plane: a few people are missing, and she continues to check the microtask line. Alas! There were a few microtask ticket holders who arrived late. She halts the boarding of callback ticket holders, processes the microtask ticket holders, and allows them to use the call stack line to board the plane before resuming the boarding of the remaining callback ticket holders. Once all ticket holders have boarded, the door closes and the plane lifts off.

Perhaps a bit contrived, but this is a high-level example of a concurrency model based on the event loop, in which JavaScript’s runtime environment provides non-blocking I/O by coordinating responses from tasks executed within a certain time frame, giving the perception of multiple tasks being performed at once (in parallel).
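To make the boarding order concrete, here is a minimal sketch that records when each kind of task runs. The `order` array and its labels are my own illustration; `setTimeout` stands in for anything that lands in the callback queue, and a resolved promise stands in for a microtask.

```javascript
// Records the order in which each kind of task actually runs.
const order = [];

// Callback queue: runs only after the call stack and microtask queue are empty.
setTimeout(() => order.push('callback'), 0);

// Microtask queue: runs as soon as the current call stack empties.
Promise.resolve().then(() => order.push('microtask'));

// Call stack: synchronous code always runs first.
order.push('call stack');

// Print the recorded order once everything has had a chance to run.
setTimeout(() => console.log(order.join(' -> ')), 10);
// call stack -> microtask -> callback
```

Even though the timer is registered first, it boards last: synchronous code finishes, then microtasks, then the callback queue.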

Now you may be asking:

- How do we manage the output of these concurrent responses?

- How does the way we manage our concurrent responses scale as the complexity of those responses increases?

CALLBACKS

The most common solution to managing concurrency is the callback pattern: functions that take an extra argument, a callback function. Calling the initial function starts some asynchronous operation, and the result of that operation is passed to the callback once it completes.
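As a rough sketch of that pattern, the example below uses a made-up `fetchUserName` function that simulates an asynchronous operation with `setTimeout`. The function name, the `greetings` array, and the delay are assumptions for illustration only.

```javascript
// Hypothetical async operation: "fetches" a user name after a short delay.
// The last argument is the callback that receives the result.
function fetchUserName(id, callback) {
  setTimeout(() => {
    callback('user-' + id);
  }, 10);
}

const greetings = [];

fetchUserName(42, (name) => {
  // Runs later, once the simulated operation has finished.
  greetings.push('Hello, ' + name);
  console.log(greetings[0]); // Hello, user-42
});

// This line runs first: fetchUserName returns before its callback fires.
console.log('request started');
```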

A couple of issues come to a head when using callbacks. Inversion of control and reasoning about the code as it becomes more complex are the two that I'm aware of. Inversion of control is where we implicitly trust third-party utilities to use our callbacks in the way we’ve intended, which clearly won’t always happen.

But this is a topic for another blog post.

As we begin to introduce new asynchronous dependencies to our application (the execution of a callback that depends on the result of a prior asynchronous call), reasoning about what each piece of code is doing becomes increasingly difficult, because callbacks express asynchronous dependencies by nesting.

#callbackhell
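Here is a small sketch of how that nesting tends to look. `getUser`, `getOrders`, and `getTotal` are hypothetical helpers that fake asynchronous lookups with `setTimeout`; the data they return is invented.

```javascript
// Hypothetical helpers; each simulates an asynchronous lookup with setTimeout.
function getUser(id, cb) {
  setTimeout(() => cb({ id, name: 'Ada' }), 10);
}
function getOrders(user, cb) {
  setTimeout(() => cb([{ orderId: 1, owner: user.name }]), 10);
}
function getTotal(order, cb) {
  setTimeout(() => cb(order.orderId * 100), 10);
}

let summary = null;

// Each step needs the previous result, so each callback nests inside the last.
getUser(7, (user) => {
  getOrders(user, (orders) => {
    getTotal(orders[0], (total) => {
      summary = user.name + ' owes ' + total;
      console.log(summary); // Ada owes 100
    });
  });
});
```

Three dependent steps already produce three levels of indentation, and every new dependency adds another.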

An issue that comes into play with nesting callbacks is how we handle scope. Local variables are only in scope within the body of the function in which they’re declared. Variables move out of scope as functions are called within other functions, and move back into scope as those functions return. When considering how to deal with scope to manage concurrent requests, two factors to keep in mind are time and persisting state.

- How do we handle out-of-scope variables and asynchronous dependencies?

- How do we persist the data?

So we need a way to express asynchronous code so it can be reasoned about more easily. One evolution of the callback pattern is the thunk.

THUNKS

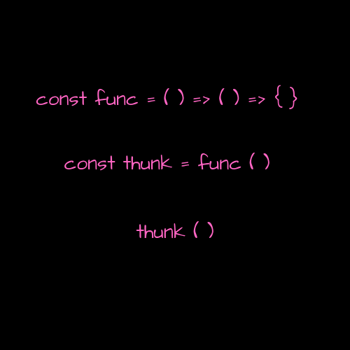

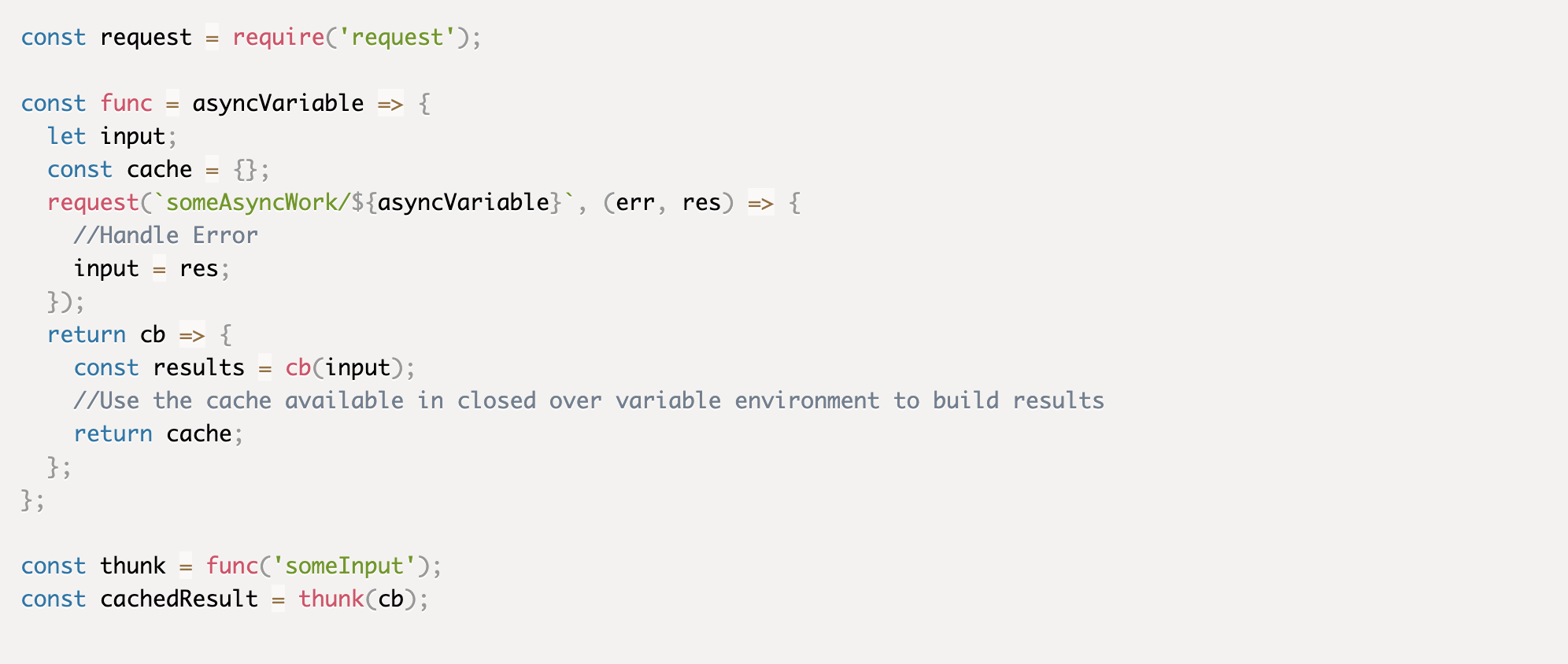

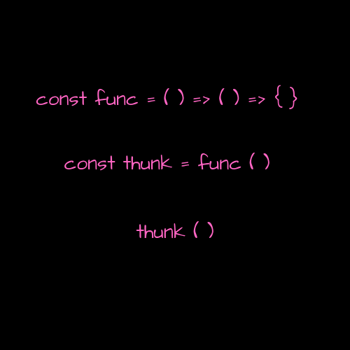

A thunk is a container around some state. A synchronous thunk takes no parameters and holds its state by making use of closure: it is typically produced by a function that returns a function, so the returned function has access to some private state via its lexical environment (the closed-over variable environment), even when it is executed outside of that lexical scope.
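A minimal sketch of a synchronous thunk might look like the following. `add` and `makeAddThunk` are names I've made up for illustration; the point is only that the returned function takes no arguments and already has everything it needs.

```javascript
// An ordinary function that needs arguments to produce a value.
function add(x, y) {
  return x + y;
}

// makeAddThunk is a hypothetical helper: it closes over x and y and
// returns a thunk, a zero-argument function that already has what it needs.
function makeAddThunk(x, y) {
  return function thunk() {
    return add(x, y);
  };
}

const sumThunk = makeAddThunk(10, 15);

// Nothing has been computed yet; calling the thunk yields the value.
console.log(sumThunk()); // 25
```

Wherever `sumThunk` gets passed, it can be treated as a stand-in for the value 25 without the caller knowing about `x` or `y`.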

Asynchronous thunks are very similar to synchronous thunks. The key difference is that asynchronous thunks take a callback as a parameter. By defining asynchronous functions in this manner we’re able to:

- Delay some functionality until a later time

- Use the state made available by the closed-over variable environment
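As a sketch of both points, the asynchronous thunk below closes over its inputs and does no work until it is handed a callback. `addAsync` and `makeAsyncAddThunk` are hypothetical names, and `setTimeout` stands in for a real asynchronous operation.

```javascript
// Hypothetical async operation, simulated with setTimeout.
function addAsync(x, y, cb) {
  setTimeout(() => cb(x + y), 10);
}

// Closes over x and y; the returned thunk only needs a callback.
function makeAsyncAddThunk(x, y) {
  return function thunk(cb) {
    addAsync(x, y, cb);
  };
}

const asyncSumThunk = makeAsyncAddThunk(10, 15);

let asyncSum = null;

// The work is delayed until the thunk is called with a callback.
asyncSumThunk((sum) => {
  asyncSum = sum;
  console.log(sum); // 25
});
```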

In using thunks to manage concurrency, we’re able to take advantage of the lexical environment created by closure to encapsulate and persist state and to compose values together. Our code also becomes slightly easier to reason about and less clunky.
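One way to picture persisting state and composing values is an "eager" thunk that starts its request immediately and remembers the response in its closure. This is only a sketch under my own assumptions: `fetchValue`, `makeEagerThunk`, and the delays are invented, and each thunk here supports a single waiting callback.

```javascript
// Hypothetical lookup; the delay argument lets responses arrive out of order.
function fetchValue(value, delay, cb) {
  setTimeout(() => cb(value), delay);
}

// Starts the request right away and keeps either the result or the waiting
// callback in closed-over variables until both are available.
function makeEagerThunk(value, delay) {
  let result;
  let done = false;
  let waiting = null;

  fetchValue(value, delay, (response) => {
    if (waiting) {
      waiting(response); // someone already asked: hand the value over
    } else {
      result = response; // nobody asked yet: persist it for later
      done = true;
    }
  });

  return function thunk(cb) {
    if (done) {
      cb(result);
    } else {
      waiting = cb;
    }
  };
}

// Both requests run concurrently; the slower one is consumed first.
const getPrice = makeEagerThunk(40, 30);
const getTax = makeEagerThunk(2, 10);

let composedTotal = null;

getPrice((price) => {
  getTax((tax) => {
    composedTotal = price + tax;
    console.log(composedTotal); // 42
  });
});
```

The calls still nest, but the code no longer cares which response arrives first: the closure holds whichever piece shows up early.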

CONCLUSION

Thunks aren’t the end-all answer. They’re a step in the direction of a better tool for managing concurrency as applications become increasingly complex. Yet, there remain problems that callbacks create and thunks don’t solve, such as inversion of control. But who knows? As we Generate more complex solutions, there most likely is a Promise of a better way to manage concurrency.

RESOURCES