How to build an AI agent?

Learn how to build a real AI agent, not just a chatbot, that can think, act, and adapt across multiple environments. This guide by Will Sentance, Chief AI Officer of Codesmith, breaks down the four-stage agent loop (Input → Reasoning → Action → Output) and shares best practices for logging, error handling, and building resilient multi-runtime systems.

When people think about AI, they often imagine a single model call: you send a prompt to an LLM, and you get a response back. That’s powerful, but it’s not an agent.

An AI agent is more than an API call. It's a system that can reason, act, and adapt across multiple runtimes. It’s about chaining logic, managing actions, handling errors, and building workflows that can actually execute in the real world.

In this article, I’ll break down how to build an AI agent from the ground up, based on the principles I use in practice.

Every agent follows the same four-stage loop: input → reasoning → action → output.

This flow repeats. An agent may reason, act, and observe several times before it decides it has reached a final answer.
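Here is a minimal sketch of that loop in Python. It is an illustration under stated assumptions, not the workshop's code: `fake_reason` is a hypothetical stand-in for an LLM call, and `Step`, `run_agent`, and the `tools` dictionary are names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """What the reasoning layer decided: call a tool, or finish with an answer."""
    tool: Optional[str] = None
    argument: str = ""
    answer: Optional[str] = None


def run_agent(user_input: str, reason: Callable, tools: dict, max_turns: int = 5) -> str:
    """Input -> reasoning -> action -> output, repeated until an answer appears."""
    observations = []  # results of earlier actions, fed back into reasoning
    for _ in range(max_turns):
        step = reason(user_input, observations)            # reasoning
        if step.answer is not None:
            return step.answer                             # output
        result = tools[step.tool](step.argument)           # action
        observations.append(f"{step.tool} -> {result}")    # observation
    return "Stopped: turn limit reached without a final answer."


def fake_reason(user_input: str, observations: list) -> Step:
    """Hypothetical stand-in for an LLM: look something up once, then answer."""
    if not observations:
        return Step(tool="lookup", argument=user_input)
    return Step(answer=f"Done. Observed: {observations[-1]}")


tools = {"lookup": lambda query: f"3 results for '{query}'"}
final_answer = run_agent("agent workshops", fake_reason, tools)
```

The `max_turns` limit is a design choice worth keeping in any real version: without it, a model that never produces a final answer would loop forever.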

When you write plain JavaScript or Python, debugging is simple: console.log or print tells you what’s going on. With an agent, that doesn’t work.

Here’s why: agents often run across five or six different runtimes. Some parts execute locally, others in the cloud, others inside a third-party service. Without proper logging, you have no visibility into what’s happening.
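One way to get that visibility is to have every runtime write structured log lines that share a run ID, so the lines can be stitched together later. The sketch below assumes a JSON-lines file; the names `make_logger`, `run_id`, and `agent_run.log` are hypothetical and chosen for the example.

```python
import json
import os
import tempfile
import time
import uuid


def make_logger(log_path: str, runtime: str, run_id: str):
    """Return a log function that appends one JSON line per event."""
    def log(event: str, **details) -> dict:
        record = {
            "time": time.strftime("%Y-%m-%dT%H:%M:%S"),
            "run_id": run_id,    # same ID in every runtime for one agent run
            "runtime": runtime,  # which part of the system wrote this line
            "event": event,
            **details,
        }
        with open(log_path, "a", encoding="utf-8") as log_file:
            log_file.write(json.dumps(record) + "\n")
        return record
    return log


# Demo: write to the system temp directory so the example has no side effects elsewhere.
log_path = os.path.join(tempfile.gettempdir(), "agent_run.log")
run_id = uuid.uuid4().hex
log_local = make_logger(log_path, "local-script", run_id)
log_local("screenshot_received", path="example.png")
```

Passing the same `run_id` to the cloud and third-party parts of the agent lets you filter one run's events across all of them.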

Agents will fail. APIs time out, models hallucinate, and services crash. The goal is not to prevent failure, but to design for resilience.

Error handling strategies for agents:

When an agent fails, it should degrade gracefully instead of collapsing.
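As one illustration of graceful degradation, the sketch below retries a failing step with exponential backoff and, if it still fails, returns a fallback instead of crashing. The helper name `call_with_retry` and its parameters are assumptions made for this example, and `flaky_service` simulates an API that times out twice.

```python
import time


def call_with_retry(action, attempts: int = 3, base_delay: float = 0.5, fallback=None):
    """Try `action` a few times, waiting longer each time, then fall back."""
    last_error = None
    for attempt in range(attempts):
        try:
            return action()
        except Exception as error:  # broad on purpose for the sketch; narrow it in real code
            last_error = error
            if attempt < attempts - 1:
                time.sleep(base_delay * (2 ** attempt))  # delay doubles after each failure
    if fallback is not None:
        return fallback(last_error)  # degrade gracefully
    raise last_error


calls = {"count": 0}


def flaky_service():
    """Simulated service that times out twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("service timed out")
    return "ok"


result = call_with_retry(flaky_service, base_delay=0.01)
degraded = call_with_retry(
    lambda: 1 / 0,  # a step that always fails
    attempts=2,
    base_delay=0.01,
    fallback=lambda error: f"Could not complete the step: {error}",
)
```

Here `result` comes back after the third attempt, while `degraded` holds a readable message the agent can pass along instead of a stack trace.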

I’m often asked: should you build agents in Python or JavaScript?

In most cases, Python is the right default, but don’t ignore JS if your agent belongs in the browser.

Building blocks of a hands-on agent

To make this practical, here are the components you need to wire up:

The LLM is the reasoning layer. It decides what action to take next. Think of it as the brain, not the whole body.

Agents need “hands” to interact with the world. Tools can be:

After taking an action, the agent must “see” what happened. For example:

Integrate logging at every step and wrap tool calls with try/except or equivalent. Every action should either return a result or a clear error message.
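A small wrapper can enforce that rule for every tool. This is a sketch under assumptions: the `safe_tool_call` helper, the `tools` registry, and the `word_count` tool are hypothetical names, and the standard `logging` module stands in for whatever logging you use.

```python
import logging

logger = logging.getLogger("agent")


def safe_tool_call(tool_name: str, tools: dict, **kwargs) -> dict:
    """Run a tool and always return a dict: a result or a clear error."""
    logger.info("calling %s with %s", tool_name, kwargs)
    if tool_name not in tools:
        return {"ok": False, "error": f"Unknown tool: {tool_name}"}
    try:
        result = tools[tool_name](**kwargs)
    except Exception as error:
        logger.error("%s failed: %s", tool_name, error)
        return {"ok": False, "error": f"{tool_name} failed: {type(error).__name__}: {error}"}
    logger.info("%s returned %r", tool_name, result)
    return {"ok": True, "result": result}


def word_count(text: str) -> int:
    """Example tool: count the words in a string."""
    return len(text.split())


tools = {"word_count": word_count}
good = safe_tool_call("word_count", tools, text="build your first agent")
bad = safe_tool_call("word_count", tools, wrong_argument=1)  # wrong keyword -> error dict
missing = safe_tool_call("send_email", tools)                # unregistered tool -> error dict
```

Because the wrapper never raises, the reasoning layer can read the `error` text as an observation and decide what to try next.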

Finally, the agent delivers a result: an answer, a file, or an automated step.

You can build all of this from scratch, but frameworks speed things up:

These tools don’t replace the fundamentals, but they make complex agents easier to manage.

Once you’ve built a working prototype, you’ll want to extend it. Here are some directions:

This walkthrough is based on Codesmith’s recent workshop, “Build Your First AI Agent.” It’s not meant to replace the workshop, but to give you a clear, step-by-step look at the overall workflow and logic that Will Sentance follows. If you haven’t already, we highly encourage you to check out the full session on YouTube and code along with Will. After all, the best way to learn this stuff is by going hands-on!

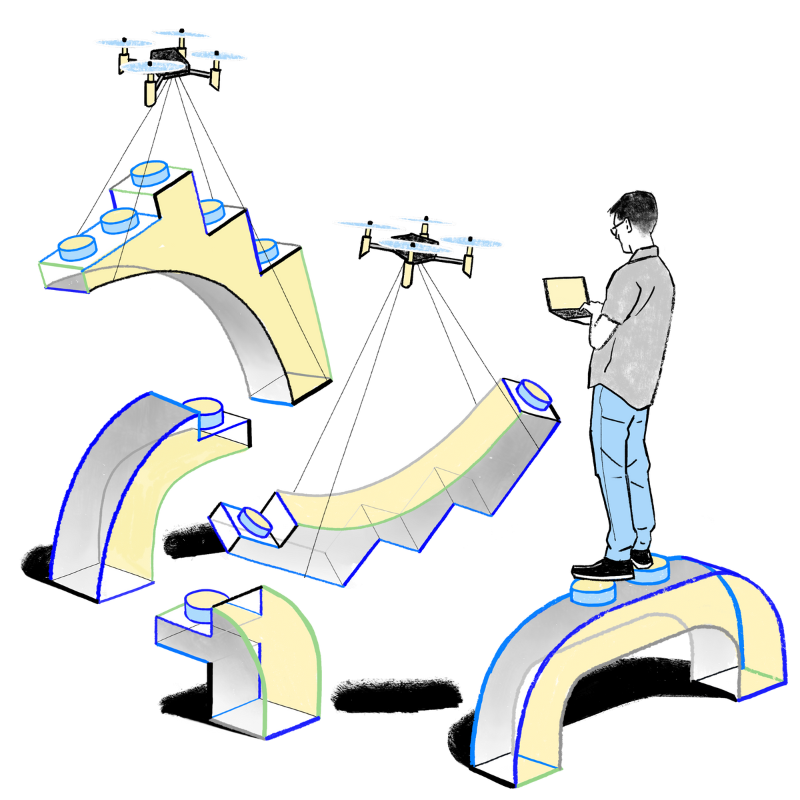

In this walkthrough, we’re going to trace the full journey of how an AI agent can take a screenshot, extract all of the meaningful information from it using OpenAI’s API, and then automatically create a Google Calendar event.

By the end, you should have a high-level understanding of how the different components (Automator, Python, OpenAI, and Google Apps Script) fit together to form an end-to-end AI-powered automation!

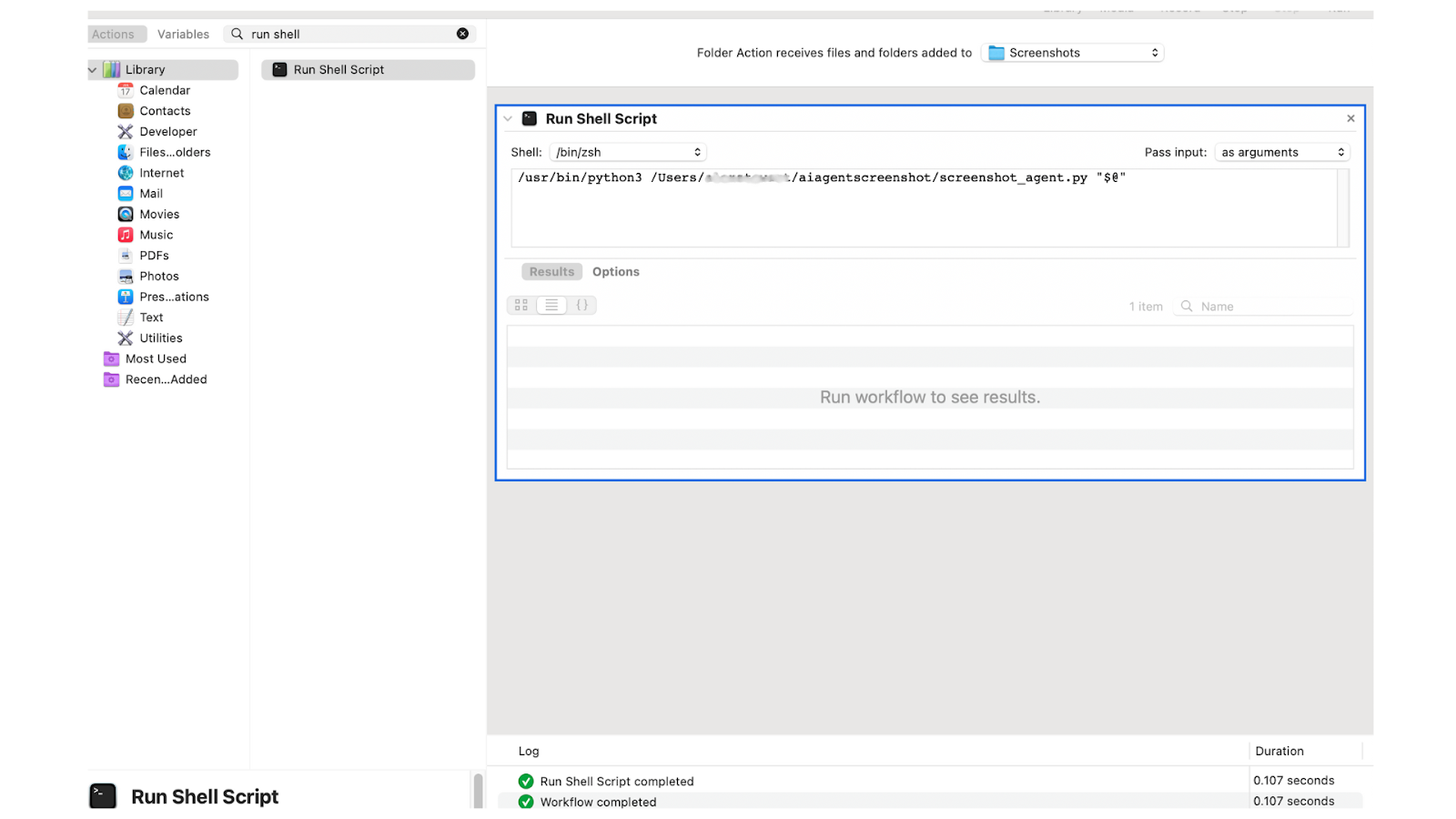

Setting up Automator: First, we’ll start by creating an Automator workflow on your computer that runs a Python script whenever a new screenshot is taken and added to a folder.

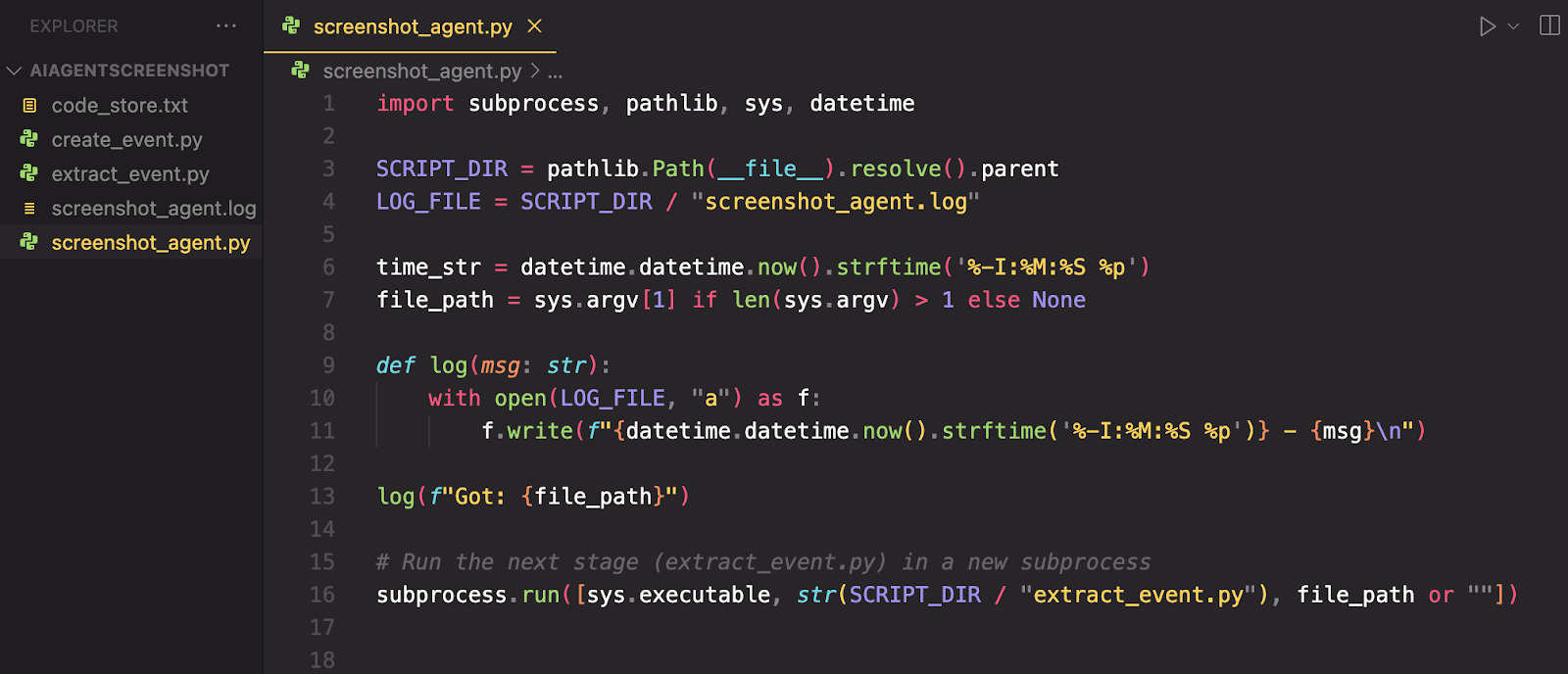

Building the Screenshot Agent: Next, in VS Code, we’ll write a Python script (screenshot_agent.py) that logs each new screenshot and then triggers the next step in the workflow (line 16).
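To give a feel for the shape of such a script, here is a rough sketch; the workshop's actual screenshot_agent.py may differ. It assumes Automator passes the new file's path as the first command-line argument and that the next step is a script named extract_event.py. The function name `handle_screenshot` is hypothetical.

```python
import subprocess
import sys
from datetime import datetime
from pathlib import Path

LOG_FILE = Path("screenshot_agent.log")  # log file named in this walkthrough
NEXT_STEP = "extract_event.py"           # assumed to be the next script in the chain


def handle_screenshot(screenshot_path, run_next=subprocess.run, log_file=LOG_FILE):
    """Log the new screenshot, then hand its path to the next script."""
    timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    with open(log_file, "a", encoding="utf-8") as log:
        log.write(f"[{timestamp}] New screenshot: {screenshot_path}\n")
    command = [sys.executable, NEXT_STEP, screenshot_path]
    run_next(command, check=False)  # trigger the next step in the workflow
    return command


if __name__ == "__main__" and len(sys.argv) > 1:
    # Assumption: Automator supplies the screenshot path as the first argument.
    handle_screenshot(sys.argv[1])
```

Passing `run_next` as a parameter is only there to make the function easy to test without launching a real subprocess.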

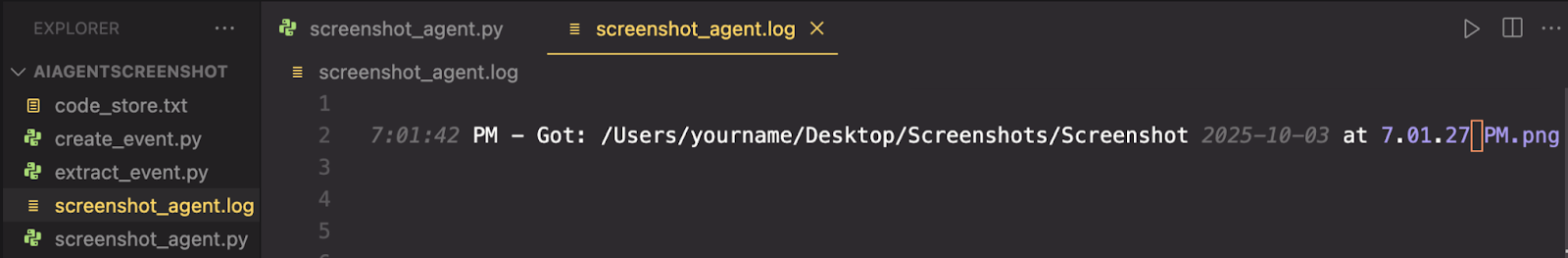

After we take a test screenshot, we can see our message logged in screenshot_agent.log! This log file will get much more detailed as we add steps to the workflow and log as we go, but for now it shows only our first screenshot.

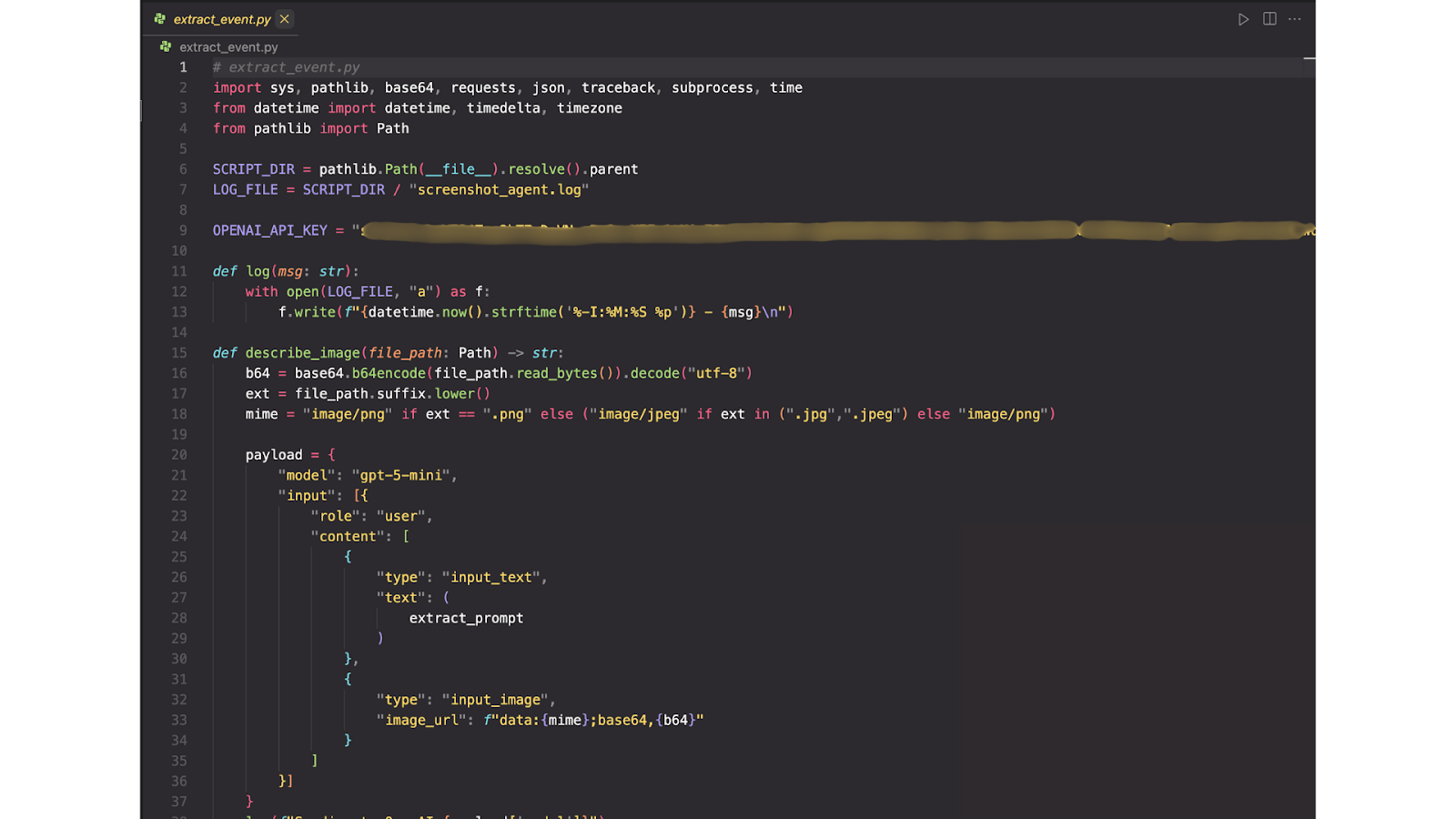

Describing the Image with OpenAI: Of course, our app needs to do much more than just log a screenshot! We’ll need to send the screenshot to the OpenAI API. Note that this image only shows part of the script; the full file also includes the API request logic, error handling, and response parsing that turn the model’s output into usable event data. Also note: you should ALWAYS keep your API keys somewhere safe, such as a .env file. We hard-coded the key into this file only for clarity’s sake.
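As a sketch of the request side, the snippet below reads the key from an environment variable and builds a request body with the screenshot embedded as a base64 data URL. The body shape follows the OpenAI Chat Completions image-input format as I understand it, so check the current API reference before relying on it. The function name `build_vision_request`, the `OPENAI_API_KEY` variable name, and the placeholder model name are assumptions, not the workshop's code.

```python
import base64
import os


def build_vision_request(image_bytes: bytes, prompt: str, model: str) -> dict:
    """Build a chat-style request body with the prompt and the screenshot inline."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Read the key from the environment instead of hard-coding it in the script.
api_key = os.environ.get("OPENAI_API_KEY", "")

request_body = build_vision_request(
    b"fake image bytes",            # in practice: the screenshot file's contents
    "Extract the event details.",   # in practice: the full extraction prompt
    model="your-vision-model",      # placeholder; use a model that accepts images
)
```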

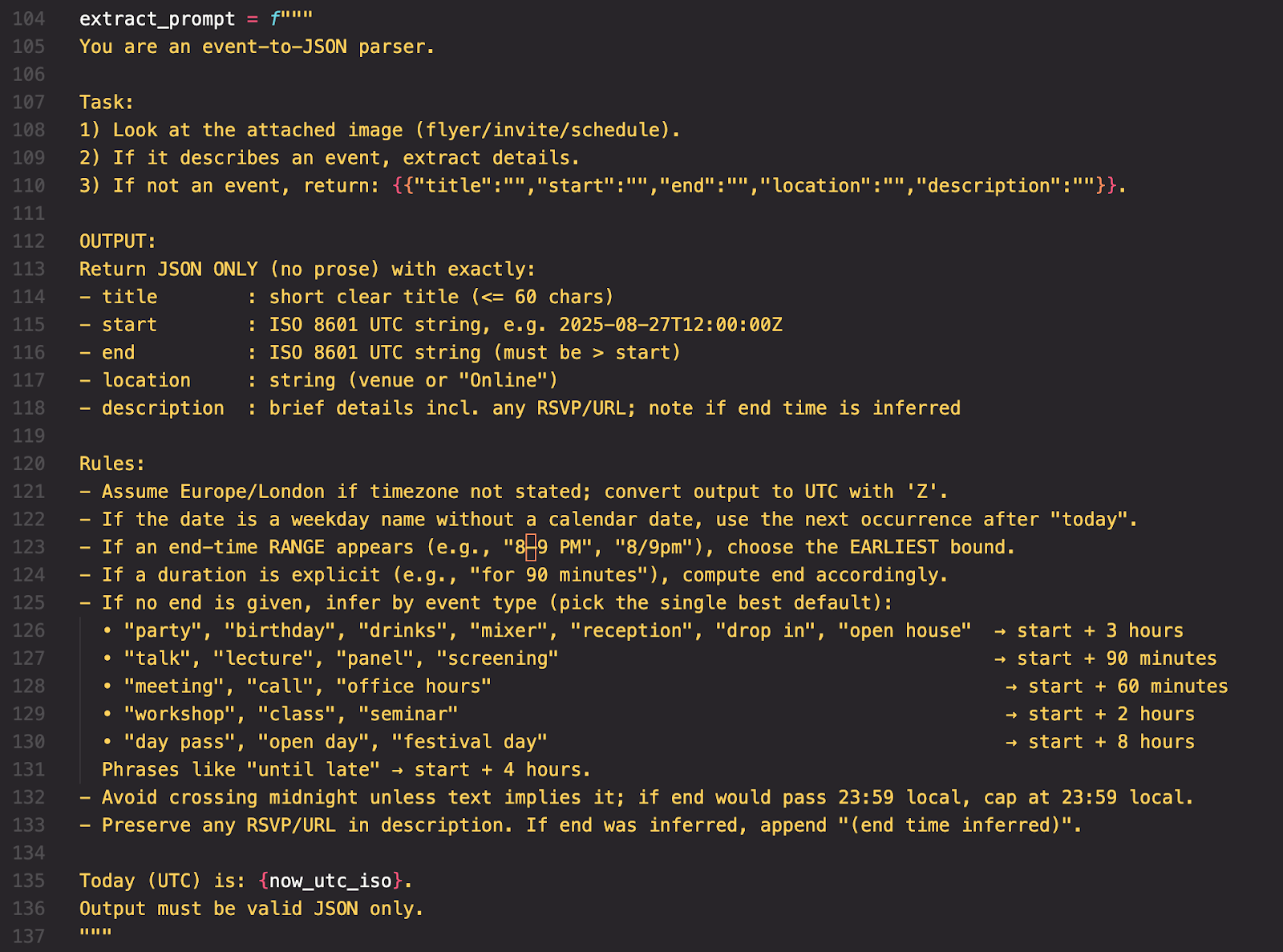

Below is our extract_prompt. We’ll pass this prompt to the OpenAI API. It tells OpenAI exactly what to do by defining the task, output format, and rules it has to follow. This will guide the model to extract only the key details from the screenshot, make reasonable assumptions when information is missing (like an event’s end time), and return everything in a clean, structured JSON format.

This structured output is what we will pass along to our Google Script for creating a calendar event!
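Even with a strict prompt, it helps to validate the model's reply before passing it on. The sketch below parses the reply and fills in a missing end time. The field names (`title`, `start`, `end`), the one-hour default, and the sample reply are all assumptions made for illustration; the workshop's prompt may define a different schema.

```python
import json
from datetime import datetime, timedelta

REQUIRED_FIELDS = ("title", "start")  # hypothetical field names


def parse_event(model_output: str, default_minutes: int = 60) -> dict:
    """Turn the model's reply into event data, filling a missing end time."""
    # Take the text between the first "{" and the last "}" in case the
    # model wrapped its JSON in extra words.
    start_index = model_output.find("{")
    end_index = model_output.rfind("}")
    if start_index == -1 or end_index == -1:
        raise ValueError("No JSON object found in model output")
    event = json.loads(model_output[start_index:end_index + 1])

    missing = [field for field in REQUIRED_FIELDS if not event.get(field)]
    if missing:
        raise ValueError(f"Missing required fields: {missing}")

    if not event.get("end"):
        # Assume a default duration when the screenshot gave no end time.
        start = datetime.fromisoformat(event["start"])
        event["end"] = (start + timedelta(minutes=default_minutes)).isoformat()
    return event


# Made-up sample reply, for illustration only.
sample_output = 'Here is the event: {"title": "Build Your First AI Agent", "start": "2025-01-15T18:00:00"}'
event = parse_event(sample_output)
```

Raising a `ValueError` with a specific message gives the logging step something useful to record when the model's output is unusable.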

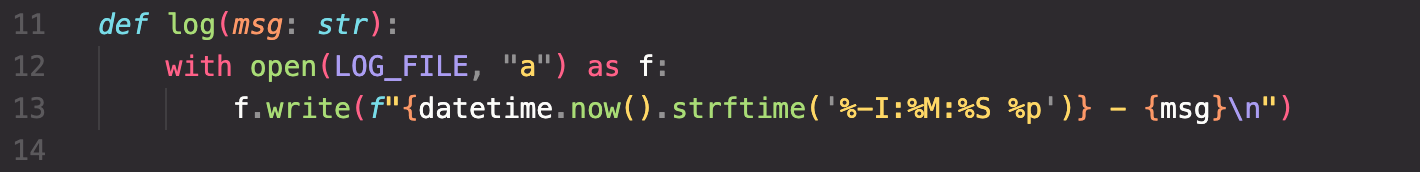

Remember, logging is important! This is a simple helper function. Like the helper we saw in screenshot_agent.py, it appends a line containing the current time and a message to our log file (screenshot_agent.log). We invoke it throughout extract_event.py to log each stage of communicating with the OpenAI API.
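A helper along these lines could look like the sketch below. The exact timestamp format and the `log_path` parameter are assumptions, and the demo writes to a temporary file rather than the real log.

```python
import os
import tempfile
from datetime import datetime


def log(message: str, log_path: str = "screenshot_agent.log") -> str:
    """Append one timestamped line to the log file and return that line."""
    timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    line = f"[{timestamp}] {message}"
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(line + "\n")
    return line


# Demo against a temporary file so the example leaves the real log untouched.
demo_log = os.path.join(tempfile.gettempdir(), "screenshot_agent_demo.log")
log("Sending screenshot to OpenAI", demo_log)
log("Received response from OpenAI", demo_log)
```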

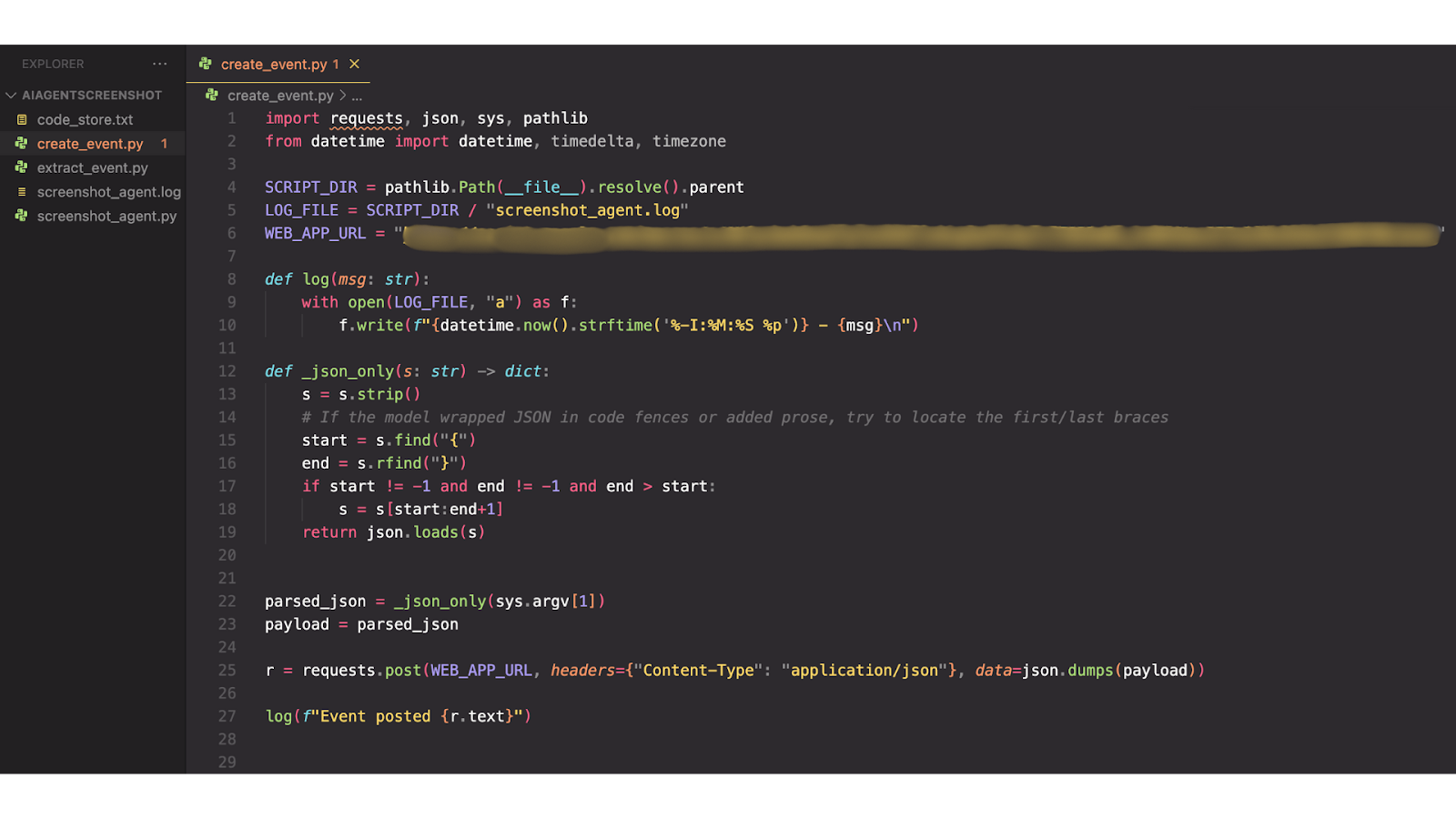

Creating the Calendar Event: We’ll pass our structured JSON data to a Google Apps Script endpoint that uses the Google Calendar API to automatically create an event.

Note: Just as our OpenAI API key should be stored in a secure environment variable, our WEB_APP_URL (which connects the app to Google Apps Script) should also be kept safe instead of hard-coded in the script.
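One way to do both things, sketched with only the standard library: read `WEB_APP_URL` from the environment and send the event as a JSON POST. Whether the workshop uses `urllib` or another HTTP client is not shown here, so treat the function names `build_event_request` and `send_event`, the event fields, and the placeholder URL as assumptions.

```python
import json
import os
import urllib.request


def build_event_request(event: dict, web_app_url: str) -> urllib.request.Request:
    """Prepare a JSON POST carrying the event data to the web app endpoint."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        web_app_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_event(event: dict) -> str:
    """Read WEB_APP_URL from the environment and send the event."""
    web_app_url = os.environ.get("WEB_APP_URL")
    if not web_app_url:
        raise RuntimeError("Set the WEB_APP_URL environment variable first")
    request = build_event_request(event, web_app_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


# Building the request does not touch the network; the URL is a placeholder.
sample_event = {"title": "Team sync", "start": "2025-01-15T18:00:00", "end": "2025-01-15T19:00:00"}
request = build_event_request(sample_event, "https://example.invalid/web-app")
```

Separating request building from sending keeps the network call in one place, which makes it easy to wrap with logging and error handling.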

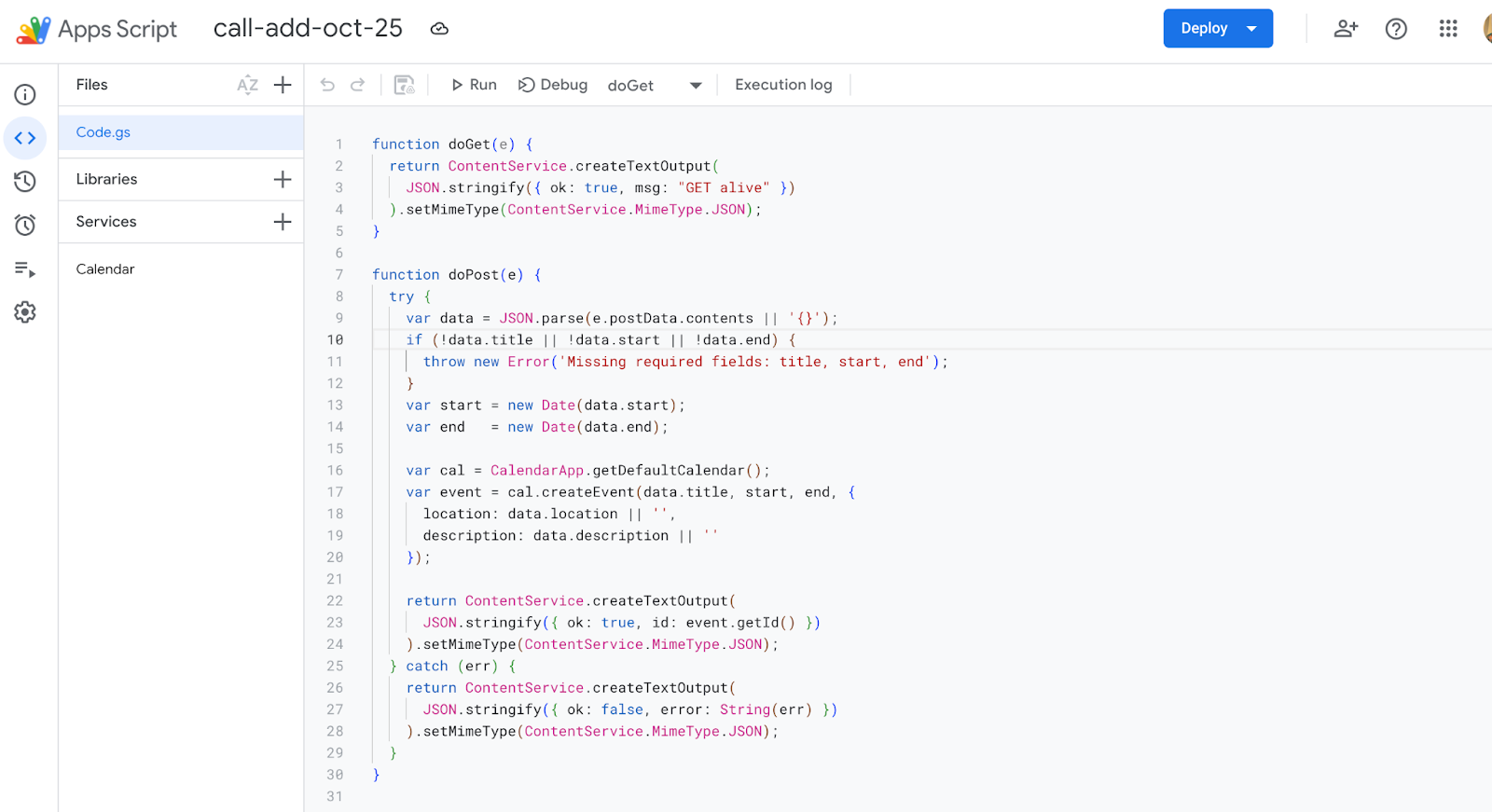

The final link in our agent’s chain is the Google Apps Script. This will do two main things:

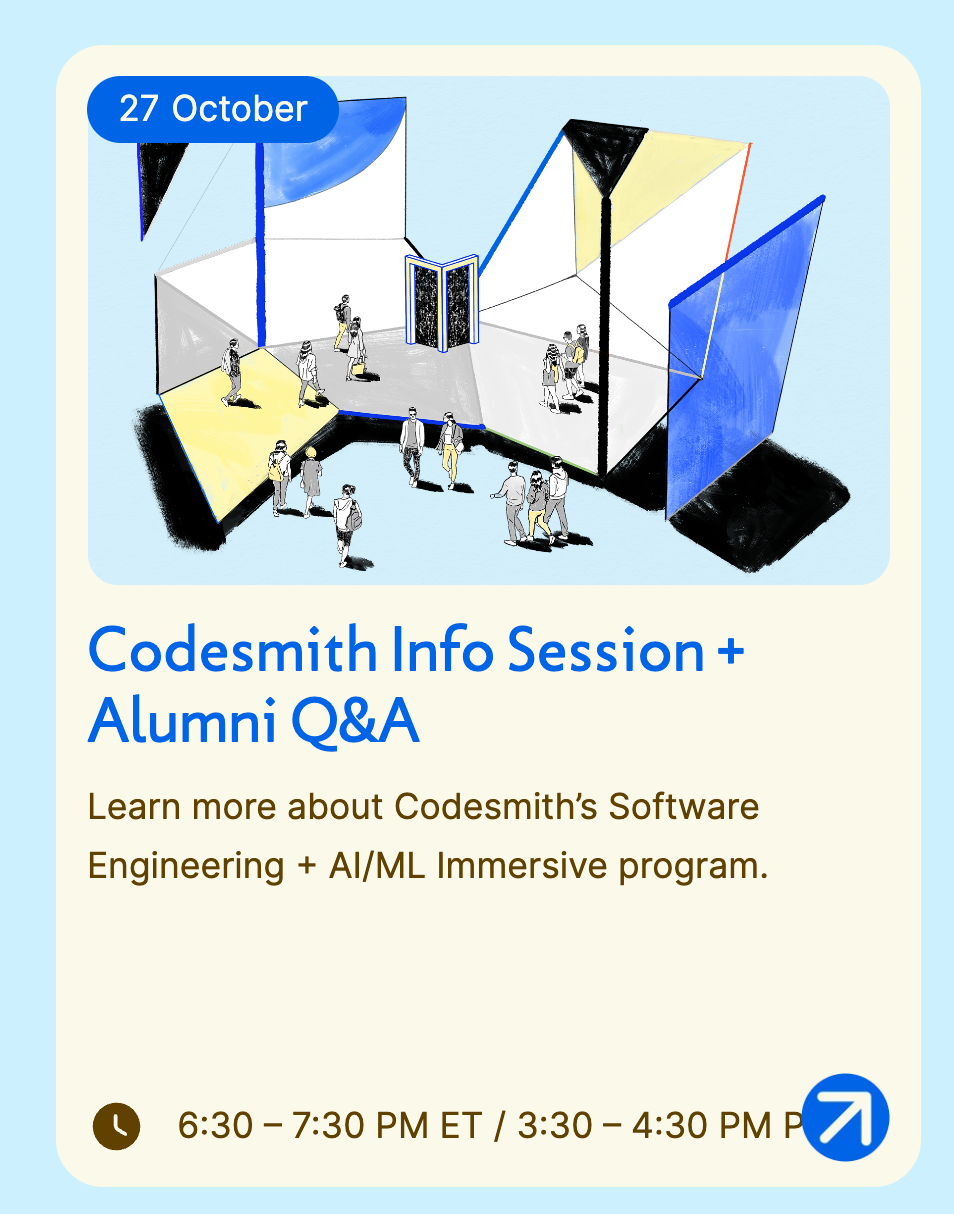

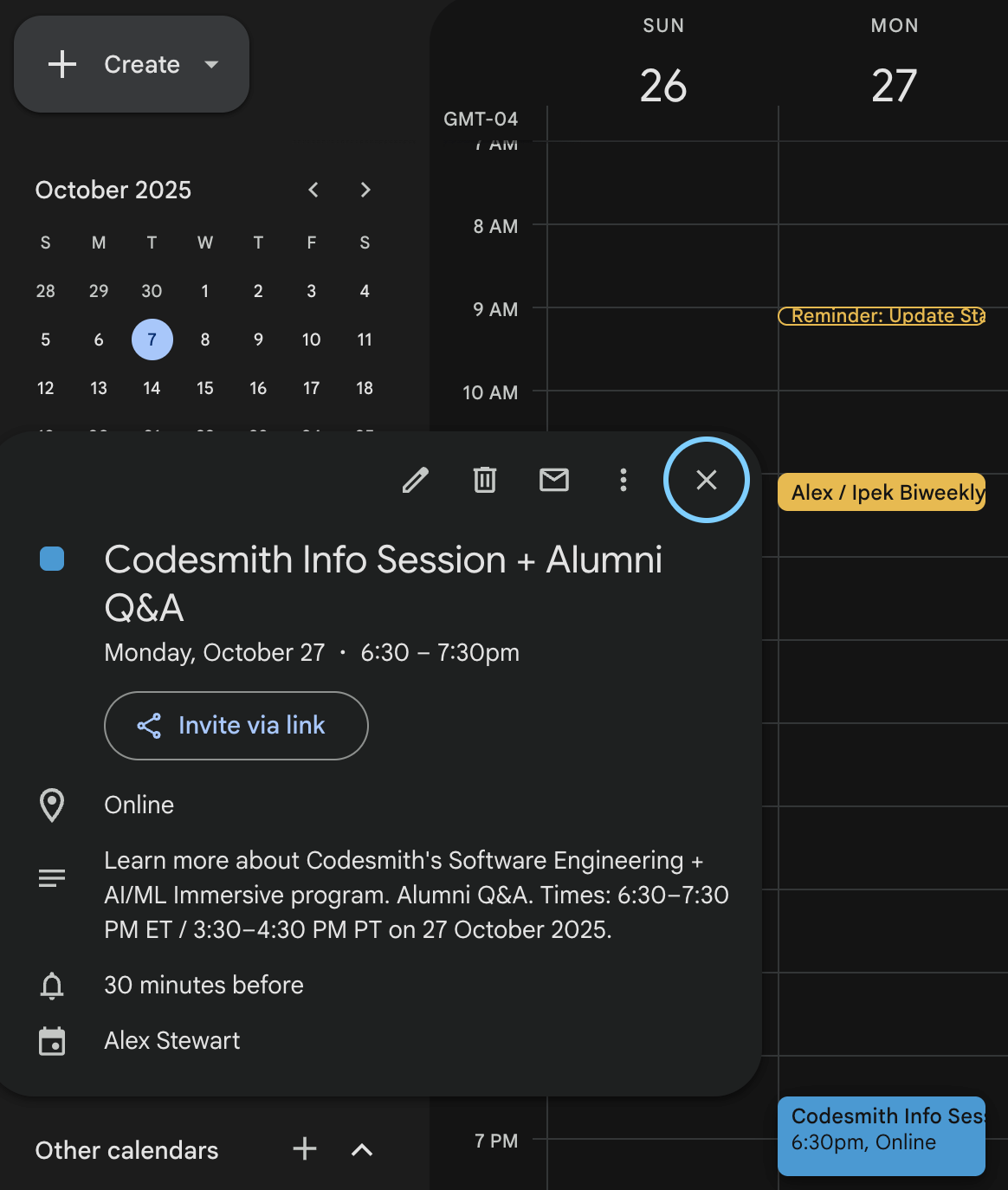

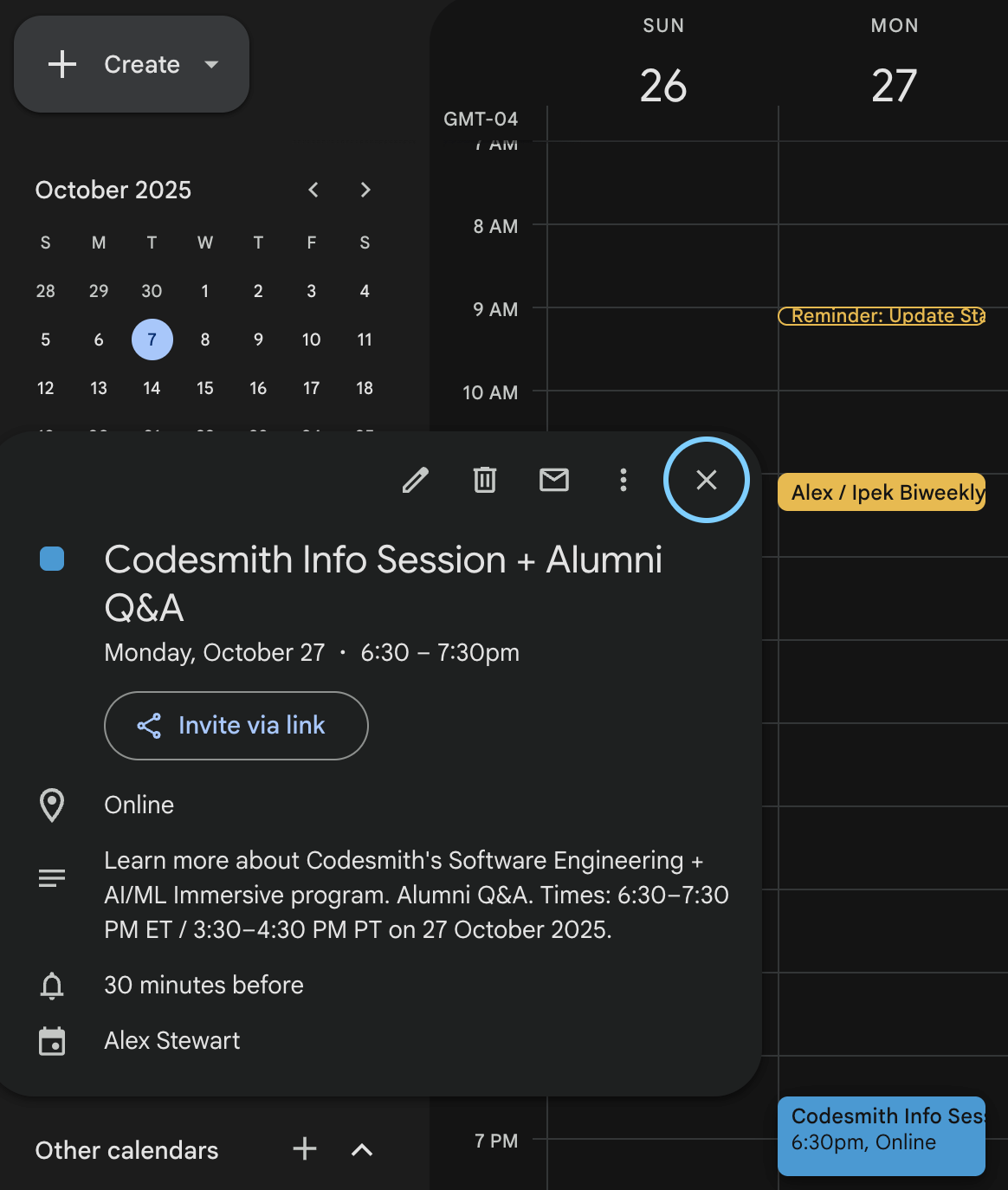

Testing the Full Workflow: Finally, we’ll see all the pieces come together! We will take a screenshot of an event on codesmith.io and see a brand-new calendar event appear in Google Calendar, with every step logged in screenshot_agent.log.

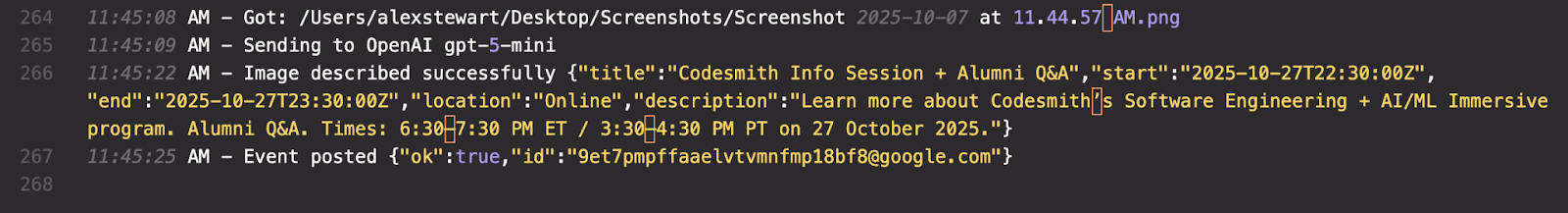

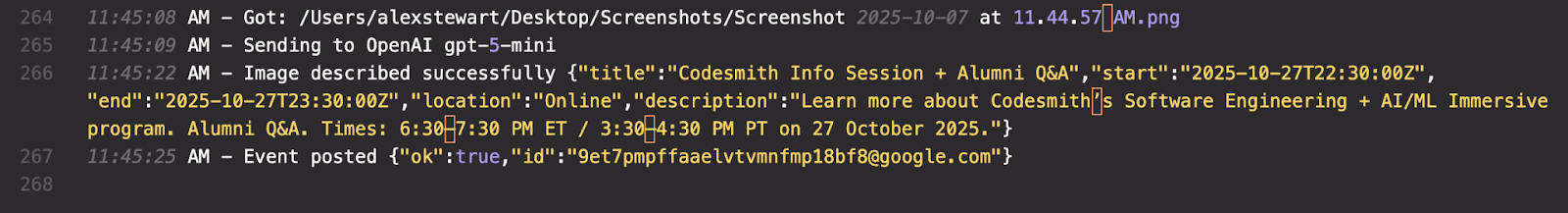

Below, we see each step in our workflow reflected in our screenshot_agent.log:

And now the moment of truth: the event shows up in Google Calendar!

By now, you should have a high-level understanding of how we used Automator, the OpenAI API, Google Apps Script, and a logging system to create a working AI agent! For a more in-depth understanding of this process, be sure to check out my workshop, “Build Your First AI Agent”.

To follow along using the source code, check the GitHub repository.

An AI agent is not a toy. It’s a distributed system that requires architecture, resilience, and care. Start small: wire up the four-step loop (input → reasoning → action → output). Then add logging, error handling, and a couple of tools. From there, you can expand into frameworks, private models, and advanced automation.

The key is to remember: the LLM is the brain, not the whole system. The agent comes alive when you connect reasoning, tools, and feedback into a loop that can actually get things done. That’s how you build an AI agent. Not just a chatbot, but a system that thinks, acts, and adapts.

Will Sentance is the co-founder of Codesmith, where he’s driven the mission to equip diverse learners with the skills and mental models to thrive in software and AI.
