How AI Learns (Part 2): Deep Learning

How is artificial intelligence (AI) getting so much smarter in such a short period of time? Recall that machine learning (ML) is a branch of AI that enables computers to learn without being explicitly programmed. Deep learning is a sub-branch of ML based on multilayer neural networks, which allow computers to model complex patterns in data. In this article, we will dive into some core concepts and architectures of deep learning that enable advanced learning by machines.

Algo-r-(h)-i-(y)-thms, 2018 art installation. Source: Photo by Alina Grubnyak on Unsplash (accessed 08/21/2025)

Data is crucial in deep learning because it is used to train the models. We can distinguish data in multiple ways.

Data can be categorized by representation:

Data can be classified by structure:

Data can be distinguished by whether it carries labels:

Raw data is rarely used directly for training. Instead, it is converted into feature vectors: lists of numbers that capture the characteristics of each data point.
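
To make the conversion concrete, here is a minimal sketch that turns a few made-up records into feature vectors. The record fields, the color vocabulary, and the choice of one-hot encoding plus min-max scaling are illustrative assumptions, not the only way to build features.

```python
# Hypothetical raw records: one categorical field and two numeric fields.
raw_data = [
    {"color": "red", "size_cm": 12.0, "weight_g": 300.0},
    {"color": "green", "size_cm": 8.0, "weight_g": 150.0},
    {"color": "blue", "size_cm": 10.0, "weight_g": 200.0},
]

COLORS = ["red", "green", "blue"]  # fixed category vocabulary for one-hot encoding


def min_max_scale(values):
    """Rescale numbers into the range [0, 1] so no feature dominates by magnitude."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all values are equal
    return [(v - lo) / span for v in values]


def to_feature_vectors(records):
    """Turn each raw record into [one-hot color..., scaled size, scaled weight]."""
    sizes = min_max_scale([r["size_cm"] for r in records])
    weights = min_max_scale([r["weight_g"] for r in records])
    vectors = []
    for record, size, weight in zip(records, sizes, weights):
        one_hot = [1.0 if record["color"] == c else 0.0 for c in COLORS]
        vectors.append(one_hot + [size, weight])
    return vectors


for vector in to_feature_vectors(raw_data):
    print(vector)
```

The red record, which has the largest size and weight, becomes `[1.0, 0.0, 0.0, 1.0, 1.0]`: three one-hot slots for the color followed by two scaled numbers.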

Embedding is an encoding technique that works well for unstructured and categorical data. An embedding is a vector representation that captures semantic meaning, so embeddings can be compared to assess similarity and identify relationships between data points.
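
One common way to compare embeddings is cosine similarity, which measures how closely two vectors point in the same direction. The sketch below uses hand-picked 3-dimensional vectors as stand-ins; these values are hypothetical, since real embeddings are learned by a model and usually have hundreds of dimensions.

```python
import math

# Hypothetical toy embeddings chosen by hand for illustration.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(embeddings["cat"], embeddings["kitten"]))
print(cosine_similarity(embeddings["cat"], embeddings["car"]))
```

With these toy values, "cat" scores much closer to "kitten" than to "car", which is the kind of relationship embeddings are meant to expose.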

A diagram showing vector data. Source: Pinecone, "The Rise of Vector Data" (accessed 08/21/2025)

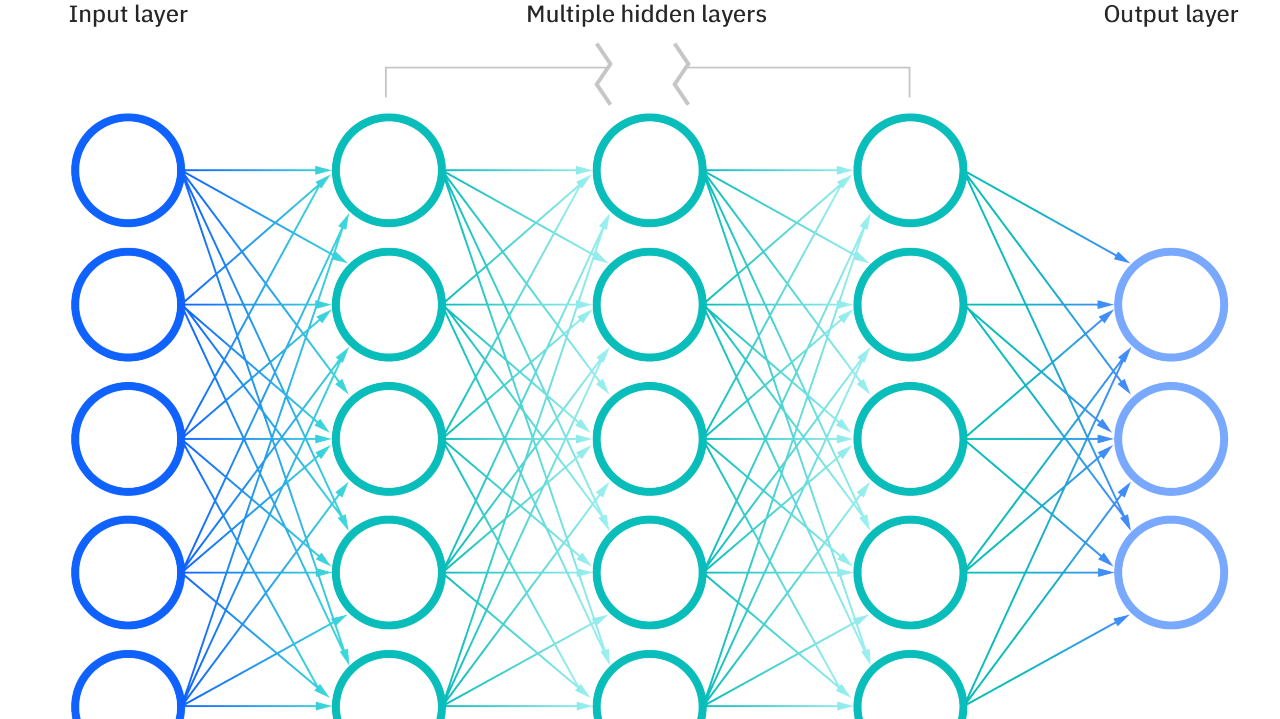

You often hear "deep learning" and "neural network" used together. They are related but distinct concepts. A neural network is a type of machine learning model, or architecture, inspired by the networks of neurons in the human brain. You can visualize a neural network as a directed graph organized into layers, where, in the simplest case, every node in one layer connects to every node in the next. A deep learning model is simply a neural network with more than three layers (counting the input and output layers).
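
The layered structure can be sketched in a few lines of plain Python. This is an illustrative forward pass, not a production implementation: the layer sizes (3, 4, 4, 1), the ReLU and sigmoid activation functions, and the random seed are all assumptions chosen for the example. Each node computes a weighted sum of its inputs, adds a bias, and applies an activation function.

```python
import math
import random

random.seed(0)  # fixed seed so the "random" starting weights are reproducible


def init_layer(n_inputs, n_outputs):
    """Random starting weights (one row per node) and zero biases."""
    weights = [[random.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """Fully connected layer: each node takes a weighted sum of ALL inputs,
    adds its bias, and passes the result through an activation function."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Input layer (3 features) -> hidden (4 nodes) -> hidden (4 nodes) -> output (1 node)
layers = [init_layer(3, 4), init_layer(4, 4), init_layer(4, 1)]
activations = [relu, relu, sigmoid]


def forward(x):
    """Feedforward pass: the output of each layer becomes the input of the next."""
    for (weights, biases), activation in zip(layers, activations):
        x = dense(x, weights, biases, activation)
    return x


print(forward([0.5, -1.2, 3.0]))  # one value between 0 and 1 (sigmoid output)
```

Counting the input and output layers, this toy network has four layers, so it just meets the "more than three layers" threshold. Until training adjusts the weights, its output is essentially meaningless.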

A visual representation of the layers in a neural network. Source: https://www.ibm.com/topics/neural-networks (accessed 08/21/2025)

The following are key components in a neural network:

A diagram of a neuron and its components. Source: https://www.codecademy.com/article/understanding-neural-networks-and-their-components (accessed 08/21/2025)

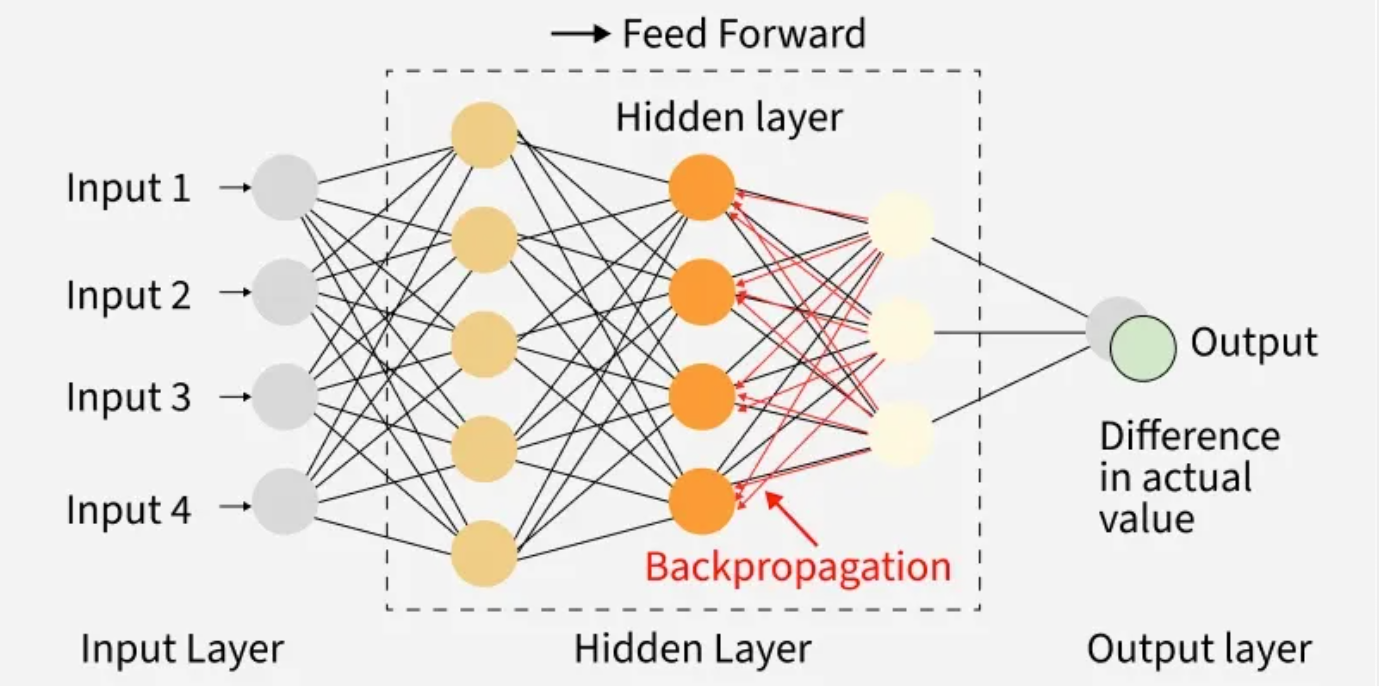

A neural network diagram with feedforward and backpropagation. Source: https://www.geeksforgeeks.org/artificial-intelligence/artificial-neural-networks-and-its-applications/ (accessed 08/21/2025)

Initially, a neural network’s weights are set to small random values (biases are often initialized to zero). As training data is fed through the network, the weights and biases are adjusted automatically, step by step, until the network produces the expected outputs for the provided inputs. This gradual learning from repeated errors loosely resembles how humans learn through trial and error.

The concept of a gradient comes from calculus: it describes the rate and direction of change of a function. The gradient is a vector that points in the direction in which the function increases most rapidly; the opposite direction is where it decreases most rapidly. In machine learning, the gradient indicates how to change model parameters to decrease error (or increase reward) most efficiently.
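
As a small worked example, take the illustrative function f(x, y) = x² + y² (chosen here only for demonstration). Its gradient collects the partial derivatives, and the last line shows the generic descent step that follows from it, where θ stands for the model parameters, J for the cost function, and η for a step size called the learning rate (symbol names are our own choice).

```latex
\nabla f(x, y) = \left( \frac{\partial f}{\partial x},\ \frac{\partial f}{\partial y} \right)

f(x, y) = x^2 + y^2 \;\Rightarrow\; \nabla f(x, y) = (2x,\ 2y), \qquad \nabla f(1, 2) = (2,\ 4)

\theta \leftarrow \theta - \eta \, \nabla J(\theta)
```

At the point (1, 2), f rises fastest in the direction (2, 4), so stepping toward (-2, -4) lowers f fastest. Gradient descent repeats exactly this kind of step on a model's cost function.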

Gradient descent is commonly paired with backpropagation in deep learning models: backpropagation computes the gradient of the error with respect to every weight, and gradient descent uses those gradients to update the weights. Gradient descent is a fundamental optimization algorithm that iteratively adjusts a model's parameters (e.g., coefficients in linear regression or weights in a neural network) toward the values that produce the lowest possible error. A loss function is a mathematical formula that measures the difference (or error) between the predicted value and the actual value for a single data point. A cost function aggregates the loss values across the entire training dataset (for example, by averaging them), producing a single overall measure of performance.
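
Here is a minimal sketch of gradient descent on the simplest case mentioned above, linear regression. The dataset (points on y = 2x + 1), the squared-error loss, the mean-squared-error cost, the learning rate, and the number of epochs are all assumptions picked for illustration.

```python
# Hypothetical training data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def loss(y_pred, y_true):
    """Squared error for a single data point."""
    return (y_pred - y_true) ** 2


def cost(w, b):
    """Mean squared error: the average loss over the whole training set."""
    return sum(loss(w * x + b, y) for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(learning_rate=0.05, epochs=500):
    w, b = 0.0, 0.0  # starting parameters
    n = len(xs)
    for _ in range(epochs):
        # Partial derivatives of the MSE cost with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the cost
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = gradient_descent()
print(f"w={w:.3f}, b={b:.3f}, cost={cost(w, b):.6f}")
```

The parameters move from (0, 0) toward w ≈ 2 and b ≈ 1, the values that minimize the cost. In a neural network, the same update is applied to every weight and bias, with backpropagation supplying the gradients.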

Hyperparameters are configuration settings that are set before model training. Unlike model parameters, which are learned from the training data, hyperparameters are defined externally to control different aspects of the learning process and model architecture.

Some hyperparameters include:

The goal of hyperparameter tuning is to find the combination of hyperparameters that gives the deep learning model the best predictive accuracy, generalization, and efficiency. Proper tuning reduces underfitting (failing to capture the patterns in the training data, which leads to inaccurate predictions) and overfitting (memorizing the training data and failing to generalize to new data). Tuning can be computationally intensive, but it can improve the model's overall performance (accuracy and consistency).
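
Grid search, which simply tries every combination from a predefined set of values, is one common way to tune hyperparameters. The sketch below tunes the learning rate and number of epochs of a tiny linear model. The data, the candidate values, and the train/validation split are hypothetical, and a real project would tune a deep network rather than a single line.

```python
import itertools

# Hypothetical noisy data for y ≈ 2x + 1, split into training and validation sets.
train = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.2), (3.0, 6.8)]
valid = [(1.5, 4.1), (2.5, 5.9)]


def fit(data, learning_rate, epochs):
    """Model parameters (w, b) are LEARNED here by gradient descent on MSE."""
    w = b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(data, w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hyperparameters are SET before training; grid search tries every combination.
grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100, 1000]}

best = None  # (validation MSE, learning_rate, epochs)
for lr, epochs in itertools.product(grid["learning_rate"], grid["epochs"]):
    w, b = fit(train, lr, epochs)
    score = mse(valid, w, b)  # judged on held-out data, not on the training data
    if best is None or score < best[0]:
        best = (score, lr, epochs)

print(f"best validation MSE={best[0]:.4f} (learning_rate={best[1]}, epochs={best[2]})")
```

Scoring each candidate on held-out validation data, rather than on the training data, is what lets the search favor settings that generalize. The cost grows multiplicatively with every hyperparameter added to the grid, which is one reason tuning can become computationally intensive.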

Some hyperparameter tuning techniques include:

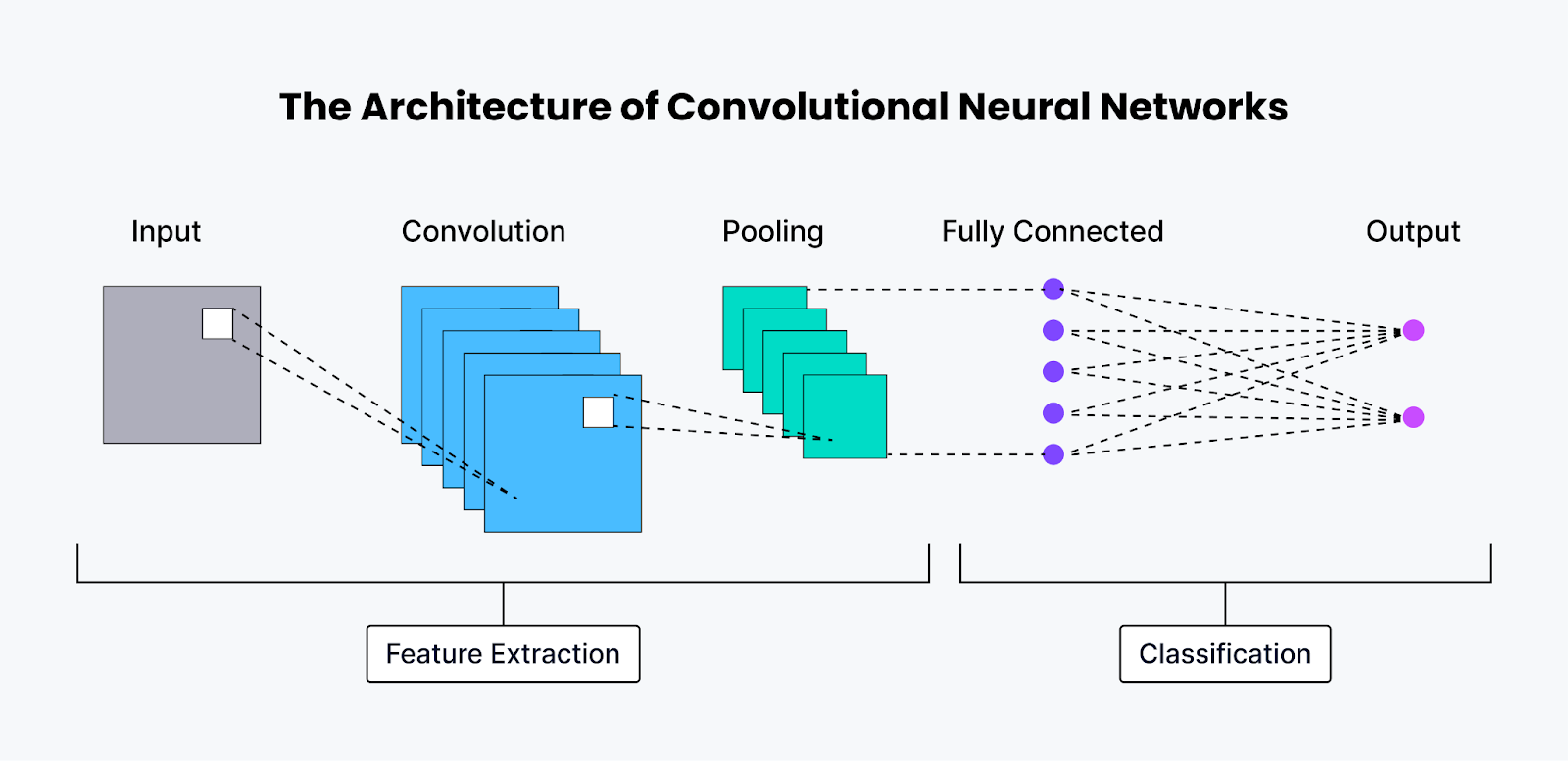

Introduced in 1989, Convolutional Neural Networks (CNNs) are inspired by the human visual system and excel at computer vision tasks such as image classification and object detection. Training them can be computationally intensive and often requires graphics processing units (GPUs).

CNNs use a multilayered architecture in which each layer detects increasingly complex features. The earlier layers identify basic visual features, such as edges and colors. The later layers combine these into more complex, abstract visual concepts, such as shapes and objects.
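
The basic operation behind those early edge-detecting layers is the convolution: a small grid of weights (a kernel) slides across the image, and each output value is the sum of element-wise products. In the minimal sketch below, the 5x5 image and the vertical-edge kernel are hand-picked for illustration; a CNN learns its kernel values during training instead. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
# A tiny 5x5 grayscale "image": dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hypothetical hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]


def convolve2d(img, k):
    """Slide kernel k over img (stride 1, no padding) and sum element-wise products."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [
        [
            sum(img[i + di][j + dj] * k[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


for row in convolve2d(image, kernel):
    print(row)  # [0, 27, 27]: zero in the flat region, large near the edge
```

The resulting feature map is zero over the uniform dark region and large where brightness changes, which is exactly the "edge" feature an early layer would pass on to later layers.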

A diagram of CNN architecture. Source: https://zilliz.com/glossary/convolutional-neural-network (accessed 08/21/2025)

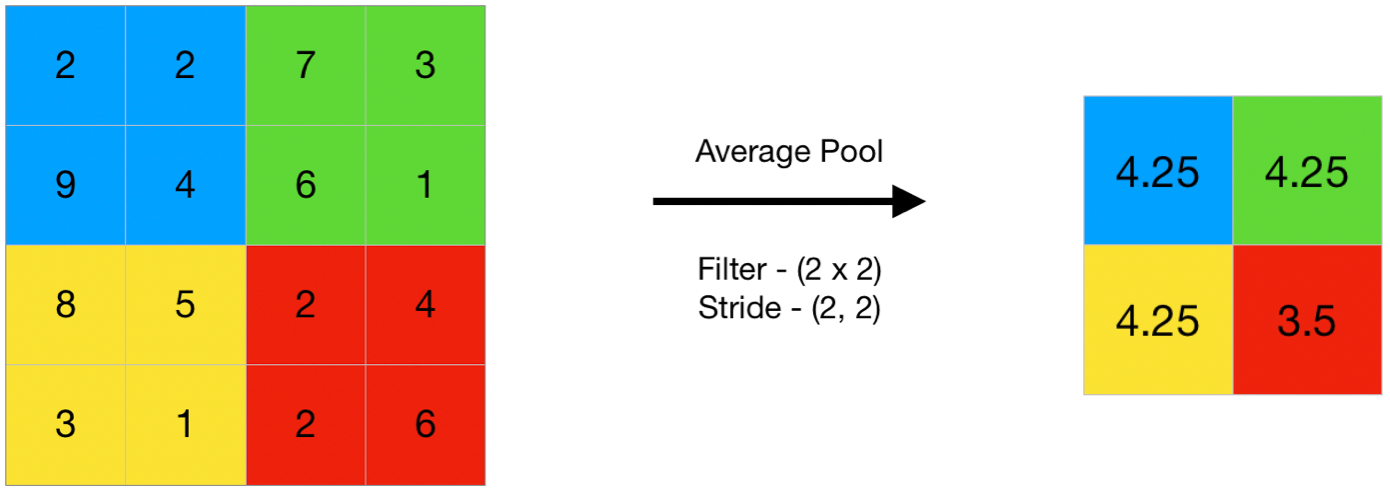

Aside from the input and output layers, there are three main types of hidden layers (from earliest to latest):

An illustration of max pooling. Source: https://www.geeksforgeeks.org/deep-learning/cnn-introduction-to-pooling-layer/ (accessed 08/21/2025)

An illustration of average pooling. Source: https://www.geeksforgeeks.org/deep-learning/cnn-introduction-to-pooling-layer/ (accessed 08/21/2025)
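
Both pooling variants shown in the illustrations can be sketched with one helper that slides a window over a feature map and reduces each window to a single number. The 4x4 feature map, the 2x2 window, and the stride of 2 are illustrative assumptions.

```python
# A hypothetical 4x4 feature map, e.g., the output of a convolutional layer.
feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [4, 1, 3, 8],
]


def mean(values):
    return sum(values) / len(values)


def pool2d(fmap, op, size=2, stride=2):
    """Slide a size x size window over fmap and reduce each window with op."""
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(op(window))
        out.append(row)
    return out


print(pool2d(feature_map, max))   # max pooling:     [[6, 4], [7, 9]]
print(pool2d(feature_map, mean))  # average pooling: [[3.75, 2.25], [3.5, 5.0]]
```

Either way, the 4x4 map shrinks to 2x2. Max pooling keeps the strongest activation in each region, while average pooling keeps a smoothed summary, and both reduce the amount of computation needed in later layers.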

CNNs offer a more scalable approach to computer vision tasks because they extract features automatically, learning relevant visual features directly from raw data. Previously, image classification and object recognition relied on manual feature extraction. CNNs laid the foundation for advances in object detection, facial recognition, video analysis, and medical imaging.

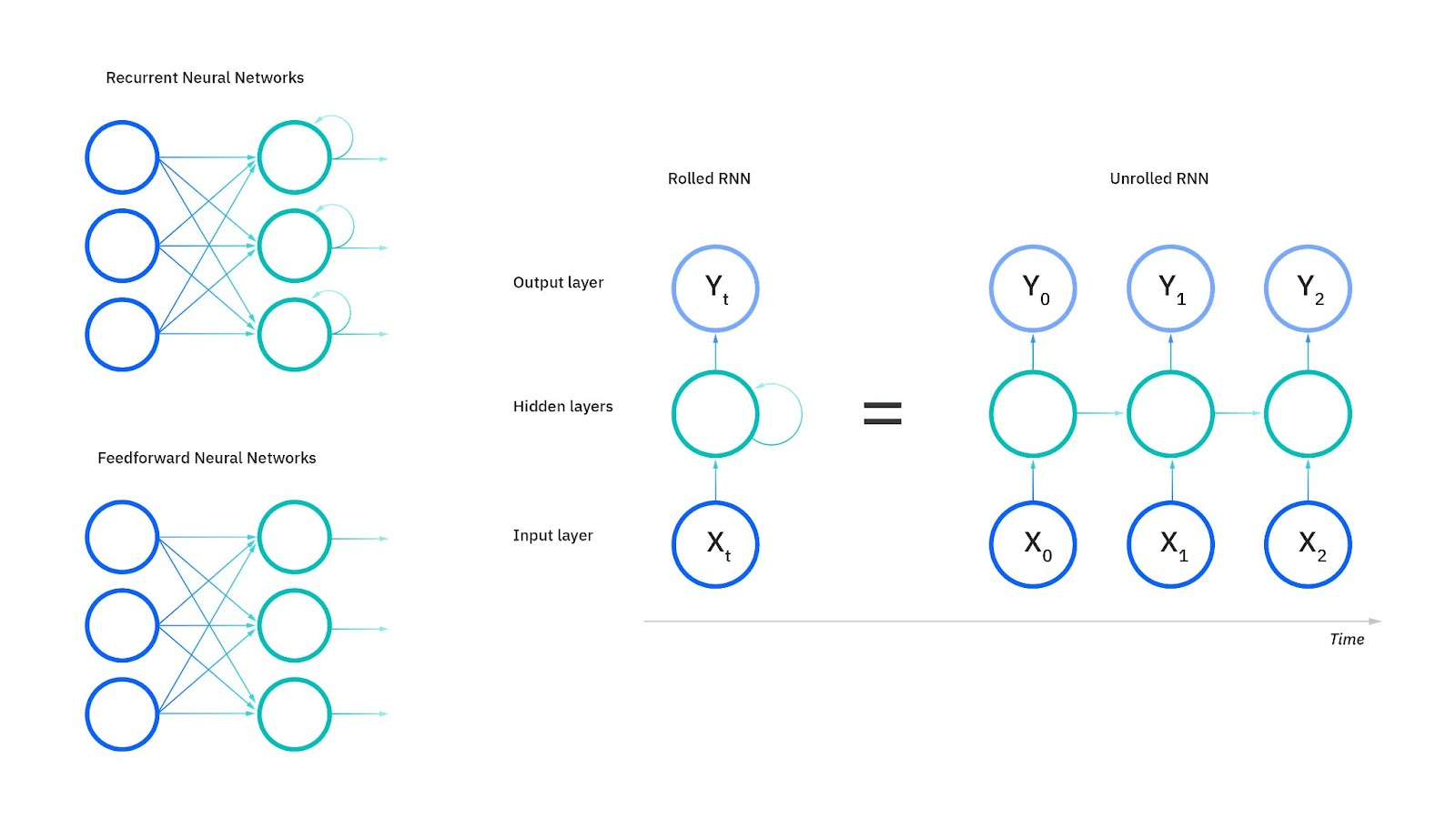

Introduced in the early 1980s, Recurrent Neural Networks (RNNs) are trained on sequential or time series data. Unlike feedforward neural networks, which process each input independently, RNNs have a memory mechanism (a hidden state) that carries information from previous inputs forward. Hence, they suit tasks that deal with ordered data (e.g., language translation in natural language processing) or make sequential predictions (e.g., predicting rainfall from past daily weather data).

Recurrent Neural Networks vs. Feedforward Neural Networks. Source: https://www.ibm.com/think/topics/recurrent-neural-networks (accessed 08/21/2025)

The following are the core layers:

Unlike feedforward neural networks, RNNs reuse the same weights at every time step of a sequence. This makes the model efficient: it can handle sequences of arbitrary length without increasing the number of weights that need to be learned. It also makes the model consistent, because the RNN applies the same transformation to the input at each step.
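
The sketch below shows weight sharing in a tiny RNN with a 1-number input and a 2-number hidden state. The parameter values are hand-picked placeholders (a real RNN learns them), and tanh is assumed as the activation. The same `W_xh`, `W_hh`, and `b_h` are used at every step, so the loop runs for any sequence length without adding parameters.

```python
import math

# Hypothetical hand-picked parameters, shared across ALL time steps.
W_xh = [0.5, -0.3]             # input -> hidden (input size 1, hidden size 2)
W_hh = [[0.1, 0.2],            # hidden -> hidden: the recurrent "memory" connection
        [-0.4, 0.3]]
b_h = [0.0, 0.1]


def rnn_step(x_t, h_prev):
    """One step: h_t = tanh(W_xh * x_t + W_hh @ h_prev + b_h)."""
    size = len(b_h)
    return [
        math.tanh(W_xh[i] * x_t + sum(W_hh[i][j] * h_prev[j] for j in range(size)) + b_h[i])
        for i in range(size)
    ]


def run_rnn(sequence):
    """Feed a sequence one element at a time, carrying the hidden state forward."""
    h = [0.0] * len(b_h)  # initial hidden state (empty memory)
    for x_t in sequence:
        h = rnn_step(x_t, h)  # the same weights are reused at every step
    return h


print(run_rnn([1.0, 2.0, 3.0]))
print(run_rnn([0.2, 0.4, 0.1, 0.9, 0.5, 0.3]))  # longer sequence, same parameters
```

The final hidden state summarizes the whole sequence, and because each step depends on the previous hidden state, reordering the inputs changes the result.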

Because of this sequential structure, RNNs are trained with Backpropagation Through Time (BPTT), an extension of standard backpropagation. BPTT unrolls the network over time, treating each time step like a layer, and computes weight updates from the errors at the current step and all prior steps.
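
A minimal BPTT sketch for a scalar RNN, h_t = tanh(w · h_{t-1} + u · x_t), with a squared-error loss on the final hidden state, is shown below. The model, the loss, and all numeric values are assumptions for illustration. The backward loop walks the unrolled steps in reverse, and because `w` and `u` are shared, their gradient contributions from every step are added together.

```python
import math


def unroll(w, u, xs):
    """Forward pass: unroll h_t = tanh(w * h_{t-1} + u * x_t), keeping every h_t."""
    hs = [0.0]  # hs[0] is the initial hidden state h_0
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def bptt(w, u, xs, target):
    """Gradients of L = (h_T - target)^2 with respect to the shared weights w and u."""
    hs = unroll(w, u, xs)
    grad_w = grad_u = 0.0
    dh = 2 * (hs[-1] - target)        # dL/dh_T
    for t in range(len(xs), 0, -1):   # walk backward through time: T, T-1, ..., 1
        dz = dh * (1 - hs[t] ** 2)    # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
        grad_w += dz * hs[t - 1]      # w is reused at every step, so contributions add up
        grad_u += dz * xs[t - 1]
        dh = dz * w                   # pass the gradient back to h_{t-1}
    return grad_w, grad_u


w, u = 0.8, 0.5
sequence = [1.0, -0.5, 0.3]
target = 0.2
grad_w, grad_u = bptt(w, u, sequence, target)
print(grad_w, grad_u)
```

A quick way to check such hand-written gradients is to nudge `w` or `u` slightly and compare the change in loss with the computed value.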

There are multiple ways to configure RNNs:

However, RNNs are prone to exploding and vanishing gradients. An exploding gradient occurs when the gradient grows exponentially as it is propagated back through many time steps, causing very large, unstable weight updates and erratic training. A vanishing gradient occurs when the gradient shrinks exponentially toward zero, so the weights tied to earlier steps are barely adjusted and the network struggles to learn long-range dependencies, which can result in underfitting. Furthermore, training RNNs demands significant computational power, memory, and time because sequential data must be processed in a serialized manner.
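
Why "exponentially"? In a deliberately simplified scalar RNN with no inputs and no activation function, h_t = w · h_{t-1}, the chain rule multiplies the gradient by w once per unrolled step, so the gradient of h_T with respect to h_0 is w raised to the power T. This toy model is an assumption made to isolate the effect; real RNNs add activations and matrices, but the repeated multiplication is the same root cause.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for h_t = w * h_{t-1}: one factor of w per time step."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule through one unrolled step
    return grad


for w in (0.5, 1.0, 1.5):
    print(w, [gradient_through_time(w, t) for t in (1, 10, 50)])
```

With w = 0.5 the gradient after 50 steps is below 1e-15 (vanishing), with w = 1.5 it is in the hundreds of millions (exploding), and only w = 1.0 keeps it stable.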

These limitations have resulted in the decline of RNNs and the rise of transformers, which are parallelized and better capture long-range dependencies.

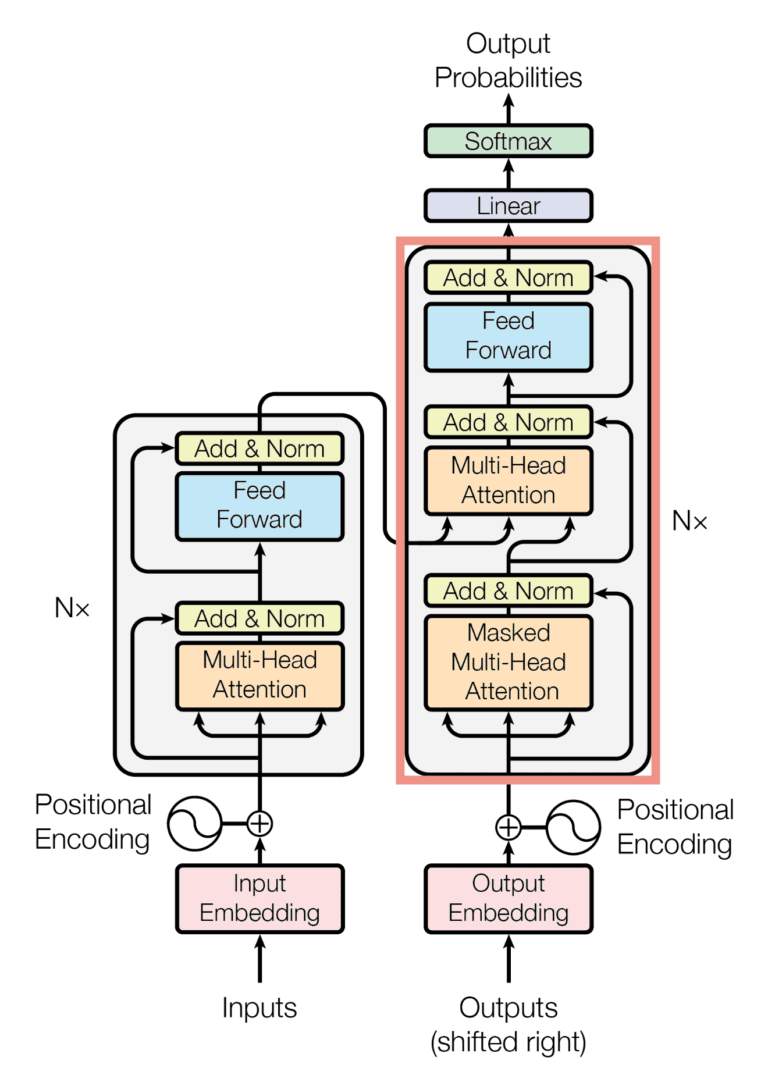

Introduced in 2017, the transformer deep learning architecture enables processing of sequential input data in a non-serialized manner. Transformers combine the encoder-decoder setup with the concept of “self-attention.” This self-attention mechanism allows the entire input sequence to be processed simultaneously, increasing model efficiency with parallelization and capacity for understanding long-range dependencies.
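
The core of self-attention is scaled dot-product attention, softmax(QKᵀ / √d_k)V, from the transformer paper cited below. In the minimal sketch that follows, the 3-token input, its 2-dimensional embeddings, and the projection matrices `W_q`, `W_k`, `W_v` are hypothetical placeholders; a real transformer learns the projections and adds multiple heads, masking, and other layers.

```python
import math


def matmul(A, B):
    """Multiply matrix A (n x k) by matrix B (k x m), both given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    # Each (query, key) score is independent of the others, so all of them can be
    # computed in parallel, unlike an RNN that must step through tokens in order.
    scores = [
        [sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
        for q_row in Q
    ]
    weights = [softmax(row) for row in scores]  # how much each token attends to each other token
    return matmul(weights, V), weights


# Hypothetical 3-token sequence, each token a 2-dimensional embedding.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical projection matrices (learned during training in a real model).
W_q = [[1.0, 0.0], [0.0, 1.0]]
W_k = [[1.0, 0.0], [0.0, 1.0]]
W_v = [[0.5, 0.0], [0.0, 2.0]]

output, attention = self_attention(X, W_q, W_k, W_v)
for row in attention:
    print([round(a, 3) for a in row])
```

Every output row is a weighted mix of all tokens' value vectors, so the first token can draw on the last one directly instead of through a chain of intermediate steps.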

A diagram of the transformer architecture with encoder on the left and decoder on the right. Source: https://arxiv.org/abs/1706.03762 (accessed 08/21/2025)

Here's an overview of the main blocks and layers:

Because self-attention connects every position in a sequence directly to every other position, gradients do not need to travel through a long chain of recurrent steps, which helps transformers avoid the vanishing and exploding gradient problems of RNNs. And because they process all positions in parallel, transformers can fully leverage GPUs to handle massive amounts of data and perform complex tasks.

Transformers can be trained on different types of sequential data, such as human and programming languages, music, and even DNA sequences. However, transformers are best known for natural language processing (NLP) tasks, such as translation and summarization. There are also vision transformers (ViTs), which adapt the transformer architecture to image data. Because images are not inherently sequential, ViTs split each image into patches and convert each patch into an embedding (a patch embedding), producing a sequence the transformer can process.
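
The first step of that workaround, cutting an image into fixed-size patches and flattening each one into a vector, can be sketched as follows. The 4x4 grayscale image and 2x2 patch size are illustrative assumptions; in a real ViT, each flattened patch is then mapped to an embedding by a learned linear projection before entering the transformer.

```python
def image_to_patches(image, patch_size):
    """Split an H x W grayscale image into non-overlapping patch_size x patch_size
    patches, flattening each patch into a vector (row-major order)."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, h, patch_size):          # patches ordered left-to-right,
        for left in range(0, w, patch_size):     # then top-to-bottom
            patch = [
                image[top + i][left + j]
                for i in range(patch_size)
                for j in range(patch_size)
            ]
            patches.append(patch)
    return patches


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(image_to_patches(image, 2))
# [[1, 2, 5, 6], [3, 4, 7, 8], [9, 10, 13, 14], [11, 12, 15, 16]]
```

The 2D image becomes a sequence of four "tokens", which is the form a transformer expects.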

Deep neural networks allow computers to understand complex data and patterns. Through feedforward computation and backpropagation, these deep learning models automatically learn from the training data by continuously adjusting their weights and biases until they can accurately predict outputs from inputs. They also utilize activation functions to inject nonlinearity and better mimic complex, real-world scenarios.

Different deep learning architectures excel at specific tasks. Convolutional Neural Networks (CNNs) automatically extract visual features for computer vision tasks. Recurrent Neural Networks (RNNs) use a memory mechanism to process sequential data. Transformers utilize a self-attention mechanism to process an entire sequence simultaneously with parallel computing capability.

Diana Cheung (ex-LinkedIn software engineer, USC MBA, and Codesmith alum) is a technical writer on technology and business. She is an avid learner and has a soft spot for tea and meows.
